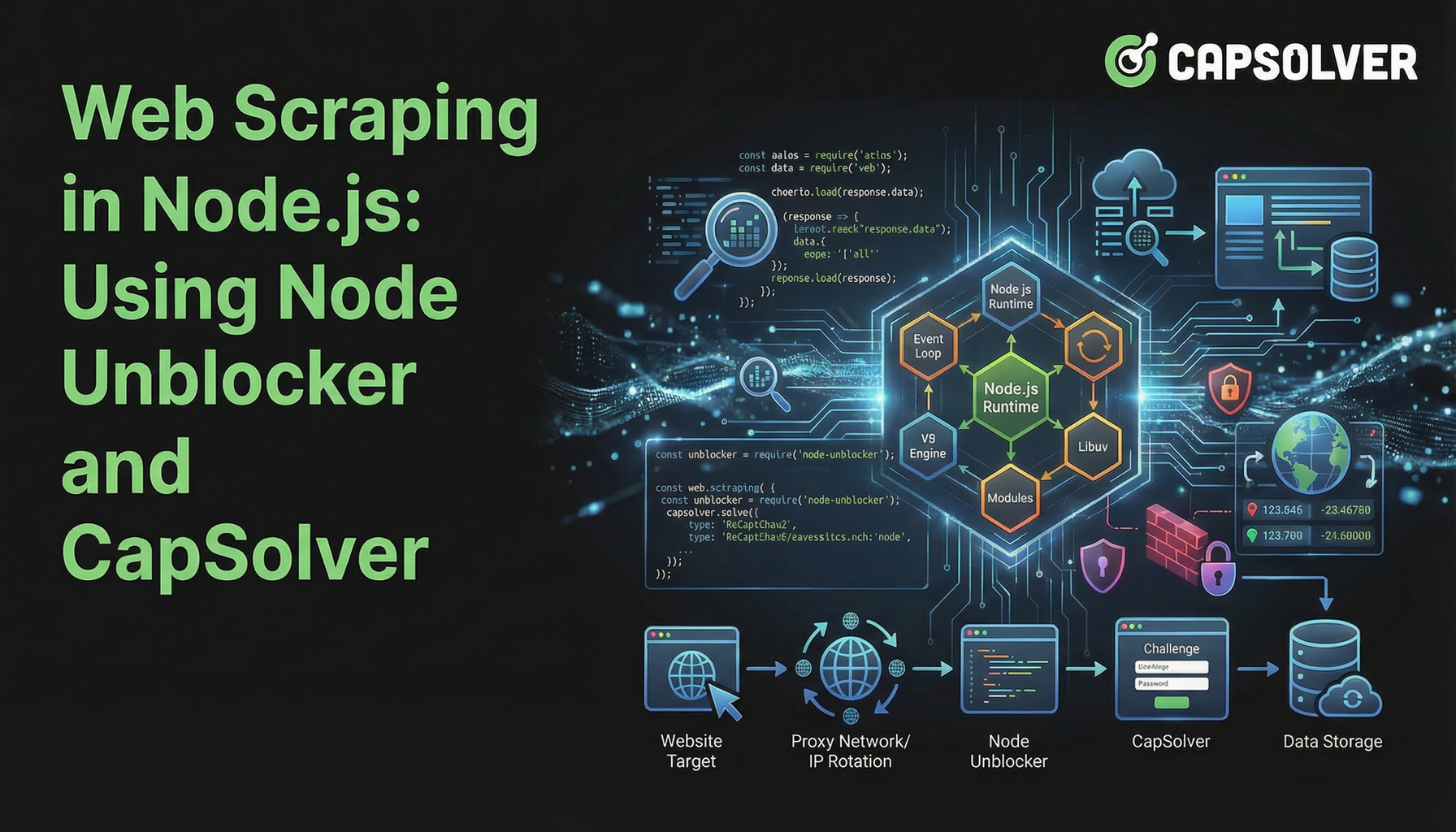

Web Scraping in Node.js: Using Node Unblocker and CapSolver

Nikolai Smirnov

Software Development Lead

03-Feb-2026

TL;DR

- Web scraping in Node.js faces increasing challenges from sophisticated bot detection and CAPTCHAs.

- Node Unblocker effectively handles basic anti-scraping measures like IP blocking and geo-restrictions by acting as a proxy middleware.

- CapSolver is essential for overcoming advanced challenges, specifically CAPTCHAs, which Node Unblocker cannot address alone.

- Combining Node Unblocker with CapSolver creates a robust and efficient solution for web scraping in Node.js.

- Integrating these tools properly makes data extraction from complex websites considerably more reliable.

Introduction

Web scraping in Node.js has become a powerful technique for data collection, but it often encounters significant hurdles. Websites increasingly deploy advanced defenses to prevent automated access, making successful data extraction a complex task. This article explores how to enhance your web scraping in Node.js projects by combining Node Unblocker, a versatile proxy middleware, with CapSolver, a specialized CAPTCHA-solving service. We will guide you through building a resilient scraping infrastructure that can navigate common web restrictions and ensure consistent data flow. This guide is for developers seeking efficient and reliable methods for web scraping in Node.js in today's challenging online environment.

Understanding the Landscape of Web Scraping Challenges

Modern websites employ various techniques to deter automated scraping efforts. These defenses range from simple IP blocking to complex interactive challenges. Successfully performing web scraping in Node.js requires understanding and addressing these obstacles.

Common challenges include:

- IP-based Blocking: Websites detect and block requests originating from suspicious IP addresses, often associated with data centers or known scraping activities.

- Rate Limiting: Servers restrict the number of requests from a single IP within a given timeframe, leading to temporary blocks or errors.

- Geo-restrictions: Content availability varies by geographical location, preventing access to certain data from specific regions.

- CAPTCHAs: These are designed to distinguish human users from bots, presenting visual or interactive puzzles that are difficult for automated scripts to solve.

- Dynamic Content: Websites rendering content with JavaScript require scrapers to execute JavaScript, adding complexity.

- Session Management: Maintaining session state and handling cookies correctly is crucial for navigating authenticated sections of websites.

These challenges highlight the need for sophisticated tools beyond basic HTTP request libraries when engaging in serious web scraping in Node.js.

Node Unblocker: A Foundation for Resilient Scraping

Node Unblocker is an open-source Node.js proxy library that helps with web scraping in Node.js by circumventing common web restrictions. It acts as a proxy: your requests are forwarded by the server running Node Unblocker, so the target site sees that server's IP address instead of yours. Deployed on a remote host (rather than on your own machine), it masks your original IP address and, if the host is in another region, can bypass geo-blocks. Its primary strength lies in its ability to modify request and response headers, handle cookies, and manage sessions, which makes it a useful first layer against anti-scraping defenses.

Key Advantages of Node Unblocker:

- IP Masking: Routes traffic through a proxy, hiding your scraper's real IP address. This helps in avoiding IP-based blocks.

- Geo-restriction Circumvention: By using proxies located in different regions, you can access content that is geographically restricted.

- Header Management: Allows easy modification of HTTP headers, such as User-Agent, Referer, and Accept-Language, to mimic legitimate browser requests.

- Cookie Handling: Automatically manages cookies, essential for maintaining session state across multiple requests.

- Middleware Integration: Designed to integrate seamlessly with popular Node.js web frameworks like Express.js, simplifying setup and usage.

- Open-Source Flexibility: Being open-source, it offers full control and customization options for developers to tailor it to specific scraping needs.
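To make the header-management point concrete, here is a minimal request middleware sketch. It assumes the middleware contract described in the Node Unblocker README: each function passed in the requestMiddleware option is called with a data object whose headers property holds the headers that will be sent to the target site (lowercase keys, as in Node's http module). The header values are examples only. The function itself has no dependencies; wiring it into Unblocker requires the express and unblocker packages and is shown as a comment.

```javascript
// Example browser-like headers (values are illustrative, not recommendations)
const BROWSER_HEADERS = {
  "user-agent":
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
  "accept-language": "en-US,en;q=0.9",
};

// Request middleware: overwrite selected outgoing headers, keep the rest
function setBrowserHeaders(data) {
  for (const [name, value] of Object.entries(BROWSER_HEADERS)) {
    data.headers[name] = value;
  }
}

// Wiring it up (requires the express and unblocker packages):
// const unblocker = new Unblocker({
//   prefix: "/proxy/",
//   requestMiddleware: [setBrowserHeaders],
// });
```

Because the middleware only overwrites the listed keys, any other headers already present on the request, such as Accept, pass through unchanged.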

Setting Up Node Unblocker for Web Scraping in Node.js

Integrating Node Unblocker into your web scraping in Node.js project is straightforward. First, ensure you have Node.js and npm installed. Then, you can install Node Unblocker and Express.js:

```bash
npm init -y
npm install express unblocker
```

Next, create an index.js file and configure Node Unblocker as middleware:

```javascript
const express = require("express");
const Unblocker = require("unblocker");

const app = express();

// Requests to /proxy/<target URL> are fetched and rewritten by Node Unblocker
const unblocker = new Unblocker({ prefix: "/proxy/" });

// Register Node Unblocker before any other routes
app.use(unblocker);

const port = 3000;

// Also hand WebSocket upgrade requests to Node Unblocker
app
  .listen(port, () => console.log(`Proxy running on http://localhost:${port}/proxy/`))
  .on("upgrade", unblocker.onUpgrade);
```

This basic setup creates a local proxy server. You can then route your scraping requests through http://localhost:3000/proxy/ followed by the target URL, for example http://localhost:3000/proxy/https://example.com/. For more detailed configuration, refer to the Node Unblocker GitHub repository.
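On the scraper side, requests simply go to the proxy prefix plus the target URL. The sketch below assumes the local instance described here (port 3000, "/proxy/" prefix) and uses Node's built-in fetch (Node.js 18+) rather than a third-party HTTP client; the helper names toProxyUrl and fetchViaProxy are hypothetical. The fetch function is injectable so the logic can be exercised without a running proxy.

```javascript
// Assumed address of the local Node Unblocker instance
const PROXY_BASE = "http://localhost:3000/proxy/";

// Validate the target and append it to the proxy prefix
function toProxyUrl(target) {
  const url = new URL(target); // throws a TypeError on malformed input
  if (url.protocol !== "http:" && url.protocol !== "https:") {
    throw new Error(`Unsupported protocol: ${url.protocol}`);
  }
  return PROXY_BASE + url.href;
}

// Fetch a page through the proxy and return its body as text
async function fetchViaProxy(target, fetchImpl = fetch) {
  const res = await fetchImpl(toProxyUrl(target));
  if (!res.ok) {
    throw new Error(`Proxy request failed with HTTP ${res.status}`);
  }
  return res.text();
}
```

With the proxy running, fetchViaProxy("https://example.com/") resolves to the page HTML as returned through Node Unblocker.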

The Missing Piece: Solving CAPTCHAs with CapSolver

While Node Unblocker excels at handling network-level restrictions, it does not address challenges like CAPTCHAs. These visual or interactive puzzles are specifically designed to differentiate human users from automated scripts. When your Node.js scraper encounters a CAPTCHA, the scraping process stops until the challenge is solved.

This is where CapSolver becomes an indispensable tool. CapSolver is a specialized CAPTCHA-solving service that provides an API to programmatically solve various types of CAPTCHAs, including reCAPTCHA v2, reCAPTCHA v3, and Cloudflare Turnstile. Integrating CapSolver into your Node.js scraping workflow allows your scraper to automatically overcome these human verification steps, ensuring uninterrupted data collection.

Use code CAP26 when signing up at CapSolver to receive bonus credits!

How CapSolver Enhances Web Scraping in Node.js:

- Automated CAPTCHA Resolution: Solves complex CAPTCHAs without manual intervention.

- Wide CAPTCHA Support: Handles various CAPTCHA types, offering a comprehensive solution.

- API Integration: Provides a straightforward API for easy integration into existing Node.js projects.

- Reliability: Offers high success rates for CAPTCHA solving, minimizing interruptions.

- Speed: Delivers fast CAPTCHA solutions, maintaining the efficiency of your scraping operations.

Integrating CapSolver into Your Node.js Scraper

To integrate CapSolver, you would typically make an API call to CapSolver whenever a CAPTCHA is detected. The process involves sending the CAPTCHA details to CapSolver, receiving the solution, and then submitting that solution back to the target website. This can be done using an HTTP client like Axios in your Node.js application.
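The send, wait, and receive flow can be sketched with Node's built-in fetch (Node.js 18+) instead of Axios. The endpoint paths (createTask, getTaskResult) and the response fields (errorId, errorDescription, taskId, status, solution) follow CapSolver's public API as we understand it; treat them as assumptions and confirm them against the current CapSolver documentation. The CAPSOLVER_API_KEY environment variable name, the polling defaults, and the solveCaptcha name are hypothetical choices.

```javascript
// Assumed CapSolver API base URL; verify against the current docs
const API_BASE = "https://api.capsolver.com";

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function solveCaptcha(task, options = {}) {
  const {
    clientKey = process.env.CAPSOLVER_API_KEY, // hypothetical env var name
    fetchImpl = fetch,
    pollIntervalMs = 2000,
    maxAttempts = 30,
  } = options;

  // POST a JSON body to the API and parse the JSON reply
  const post = async (path, body) => {
    const res = await fetchImpl(`${API_BASE}${path}`, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(body),
    });
    return res.json();
  };

  // 1. Submit the CAPTCHA details and receive a task ID
  const created = await post("/createTask", { clientKey, task });
  if (created.errorId) {
    throw new Error(`createTask failed: ${created.errorDescription}`);
  }

  // 2. Poll for the result until it is ready or the attempts run out
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    await sleep(pollIntervalMs);
    const result = await post("/getTaskResult", { clientKey, taskId: created.taskId });
    if (result.errorId) {
      throw new Error(`getTaskResult failed: ${result.errorDescription}`);
    }
    if (result.status === "ready") {
      return result.solution;
    }
  }
  throw new Error("CAPTCHA not solved within the polling limit");
}
```

For a reCAPTCHA v2 page you would pass a task such as { type: "ReCaptchaV2TaskProxyLess", websiteURL, websiteKey } and read solution.gRecaptchaResponse. Submitting that token back to the target site is site-specific and is not shown here.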

For example, after setting up your Node Unblocker proxy, your scraping logic would include a check for CAPTCHAs. If one is found, you would initiate a call to CapSolver. You can find detailed examples and documentation on how to integrate CapSolver for various CAPTCHA types in our articles, such as How to Solve reCAPTCHA with Node.js and How to solve Cloudflare Turnstile Captcha with NodeJS.
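The CAPTCHA check itself can be as simple as scanning the HTML returned by the proxy for known widget markup. The sketch below is a heuristic, not a complete detector: it assumes reCAPTCHA v2 and Turnstile widgets are embedded as elements with the g-recaptcha or cf-turnstile class and a data-sitekey attribute, and the CapSolver task type names are assumptions to verify in the CapSolver docs. The solve parameter stands for any function that sends the task to CapSolver and resolves with its solution object; detectCaptcha and scrapePage are hypothetical names.

```javascript
// Assumed address of the local Node Unblocker instance
const PROXY_PREFIX = "http://localhost:3000/proxy/";

// Widget class name -> assumed CapSolver task type
const WIDGETS = [
  { className: "cf-turnstile", taskType: "AntiTurnstileTaskProxyLess" },
  { className: "g-recaptcha", taskType: "ReCaptchaV2TaskProxyLess" },
];

// Return a CapSolver-style task for the first widget found, or null
function detectCaptcha(html, pageUrl) {
  const tags = html.match(/<[a-z][^>]*>/gi) || [];
  for (const tag of tags) {
    const classAttr = tag.match(/\bclass=["']([^"']*)["']/i);
    const keyAttr = tag.match(/\bdata-sitekey=["']([^"']+)["']/i);
    if (!classAttr || !keyAttr) continue;
    const classes = classAttr[1].split(/\s+/);
    const widget = WIDGETS.find((w) => classes.includes(w.className));
    if (widget) {
      return { type: widget.taskType, websiteURL: pageUrl, websiteKey: keyAttr[1] };
    }
  }
  return null;
}

// Fetch through the proxy; if a widget is present, ask the solver for a token
async function scrapePage(targetUrl, { fetchImpl = fetch, solve }) {
  const res = await fetchImpl(PROXY_PREFIX + targetUrl);
  const html = await res.text();
  const task = detectCaptcha(html, targetUrl);
  if (!task) return { html, token: null };
  const solution = await solve(task);
  // Assumed field names: gRecaptchaResponse (reCAPTCHA), token (Turnstile)
  return { html, token: solution.gRecaptchaResponse ?? solution.token ?? null };
}
```

Keeping the solver injectable separates detection from the CapSolver client, so each piece can be tested or replaced on its own.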

Comparison: Node Unblocker Alone vs. Node Unblocker + CapSolver

Understanding the distinct roles of Node Unblocker and CapSolver is crucial for effective web scraping in Node.js. While Node Unblocker provides foundational proxy capabilities, CapSolver addresses a specific, advanced challenge.

| Feature | Node Unblocker Alone | Node Unblocker + CapSolver |
|---|---|---|
| IP Masking | Yes | Yes |
| Geo-restriction Circumvention | Yes | Yes |
| Header/Cookie Management | Yes | Yes |
| CAPTCHA Resolution | No | Yes |
| Bot Detection (Basic) | Partial (via IP/header changes) | Enhanced (solves CAPTCHAs, reducing bot scores) |
| Complexity of Setup | Moderate | Moderate to High (requires CapSolver API integration) |
| Cost | Free (open-source) | Free (open-source) + CapSolver service fees |
| Reliability for Complex Sites | Limited | High |
| Ideal Use Case | Simple sites, basic data collection, initial testing | Complex sites with CAPTCHAs, large-scale data extraction, production environments |

This comparison clearly shows that for robust web scraping in Node.js against modern web defenses, a combined approach is superior. Node Unblocker handles the routing and basic evasion, while CapSolver provides the intelligence to overcome CAPTCHAs.

Advanced Strategies for Web Scraping in Node.js

Beyond just using Node Unblocker and CapSolver, several advanced strategies can further enhance your web scraping in Node.js projects. These techniques focus on mimicking human behavior and managing resources efficiently.

- User-Agent Rotation: Regularly changing the User-Agent header helps avoid detection. A diverse pool of User-Agents makes your requests appear to come from different browsers and devices. Learn more about managing user agents in our article on Best User-Agent.

- Request Delay and Randomization: Introducing random delays between requests prevents your scraper from hitting rate limits. Human browsing patterns are rarely perfectly consistent.

- Headless Browsers: For websites heavily reliant on JavaScript, using headless browsers like Puppeteer or Playwright is essential. These tools can execute JavaScript and render pages just like a real browser. You can integrate CapSolver with these tools; see our guides on How to Integrate Puppeteer and How to Integrate Playwright.

- Proxy Rotation: While Node Unblocker provides a single proxy layer, rotating through a pool of different proxies (residential, mobile) can significantly reduce the chances of IP blocking. This is especially important for large-scale web scraping in Node.js operations.

- Error Handling and Retries: Implement robust error handling with retry mechanisms for failed requests. This makes your scraper more resilient to temporary network issues or soft blocks.
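Several of these strategies (User-Agent rotation, randomized delays, proxy rotation, and retries) fit naturally into one request wrapper. The sketch below is one possible design, not a prescribed one: it uses Node's built-in fetch (Node.js 18+), the User-Agent strings are examples, the second proxy host is hypothetical, and treating HTTP 429 and 5xx responses as retryable soft blocks is an assumption you may want to adjust. It also assumes the User-Agent set on the request reaches the target site through the proxy.

```javascript
// Example User-Agent pool (illustrative strings)
const USER_AGENTS = [
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
  "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
];

// Node Unblocker instances to rotate through; the second host is hypothetical
const PROXY_PREFIXES = [
  "http://localhost:3000/proxy/",
  "http://proxy-2.example.com/proxy/",
];

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
const pick = (list) => list[Math.floor(Math.random() * list.length)];

// Random delay in the range [minMs, maxMs)
function jitter(minMs, maxMs) {
  return minMs + Math.random() * (maxMs - minMs);
}

// Up to `retries + 1` attempts, each with a fresh proxy and User-Agent
async function politeFetch(targetUrl, options = {}) {
  const {
    fetchImpl = fetch,
    retries = 3,
    baseDelayMs = 1000,
    minGapMs = 500,
    maxGapMs = 2000,
  } = options;

  for (let attempt = 0; attempt <= retries; attempt++) {
    // Random pause before every request so timing is not perfectly regular
    await sleep(jitter(minGapMs, maxGapMs));
    const url = pick(PROXY_PREFIXES) + targetUrl;
    try {
      const res = await fetchImpl(url, { headers: { "User-Agent": pick(USER_AGENTS) } });
      // Treat rate limiting and server errors as retryable soft blocks
      if (res.status === 429 || res.status >= 500) {
        throw new Error(`HTTP ${res.status}`);
      }
      return res;
    } catch (err) {
      if (attempt === retries) throw err;
      // Exponential backoff: baseDelayMs, then 2x, 4x, ...
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
}
```

Non-retryable responses such as 404 are returned to the caller unchanged, so the scraping logic can decide how to handle them.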

By combining these strategies with Node Unblocker and CapSolver, you can build an effective solution for web scraping in Node.js. For more general tips on avoiding detection, refer to our article on Avoiding IP Bans.

Conclusion

Effective web scraping in Node.js in 2026 demands a multi-faceted approach to overcome increasingly complex web defenses. Node Unblocker provides a robust open-source foundation for managing proxy connections, masking IPs, and handling basic HTTP intricacies. However, for the most challenging obstacles, particularly CAPTCHAs, a specialized service like CapSolver is indispensable. The synergy between Node Unblocker and CapSolver creates a powerful and reliable scraping infrastructure, enabling developers to extract data consistently and efficiently.

By integrating these tools and adopting advanced scraping strategies, you can build resilient web scraping in Node.js applications that stand up to modern bot detection mechanisms. Equip your projects with the right combination of tools to ensure your data collection efforts are successful and sustainable.

Frequently Asked Questions (FAQ)

Q: What is Node Unblocker used for in web scraping?

A: Node Unblocker is primarily used as a proxy middleware in web scraping in Node.js to mask the scraper's IP address, circumvent geo-restrictions, and manage HTTP headers and cookies. It helps in bypassing basic anti-scraping measures and making requests appear more legitimate.

Q: Can Node Unblocker solve CAPTCHAs?

A: No, Node Unblocker itself cannot solve CAPTCHAs. Its functionality is focused on network-level proxying and request modification. To solve CAPTCHAs encountered during web scraping in Node.js, you need to integrate a specialized CAPTCHA-solving service like CapSolver.

Q: Why should I use CapSolver with Node Unblocker?

A: Use CapSolver with Node Unblocker to build a more complete solution for web scraping in Node.js. Node Unblocker handles IP masking and basic evasion, while CapSolver provides the crucial ability to automatically solve CAPTCHAs, which are a common roadblock for automated scrapers on protected websites.

Q: Are there any alternatives to Node Unblocker for proxy management?

A: Yes, there are several alternatives for proxy management in web scraping in Node.js, including custom proxy rotation scripts, commercial proxy services, or other open-source libraries. However, Node Unblocker offers a convenient middleware approach for Express.js applications.

Q: What are the legal considerations for web scraping?

A: Legal considerations for web scraping in Node.js include respecting robots.txt files, adhering to website terms of service, and complying with data protection regulations like GDPR or CCPA. Always ensure your scraping activities are ethical and legal.

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
