Web Scraping Challenges and How to Solve Them

Ethan Collins

Pattern Recognition Specialist

28-Oct-2025

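The internet is a vast repository of data, but tapping its full potential can be difficult. Whether you are dealing with unstructured data, working around restrictions imposed by websites, or running into other obstacles, accessing and using web data effectively means overcoming significant hurdles. This is where web scraping becomes essential. By automating the extraction and processing of unstructured web content, you can compile large datasets that yield valuable insights and a competitive edge.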

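However, web data enthusiasts and professionals face many challenges in the constantly changing online environment. In this article, we explore the six main web scraping challenges that beginners and experts alike need to understand, along with the most effective solutions for overcoming them.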

Let's dive into the world of web scraping and see how to overcome these challenges!

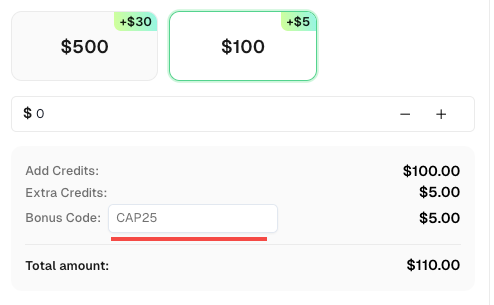

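Bonus Code

Don't miss the chance to further optimize your operations! Use the bonus code CAP25 when topping up your CapSolver account to receive an extra 5% bonus on every recharge, with no limit. Visit the CapSolver Dashboard to redeem your bonus now!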

IP Blocking

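To prevent abuse and unauthorized scraping, websites often block clients based on unique identifiers such as IP addresses. When certain limits are exceeded or suspicious activity is detected, a site may ban the offending IP address, which effectively shuts out the automated scraper.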

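Websites may also use geo-blocking, which restricts access based on the geographic location of an IP address. Other anti-bot measures analyze IP origin and unusual usage patterns to identify and block IP addresses.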

Solution

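Fortunately, there are several ways to get around IP blocks. The simplest is to adjust your requests to stay within the site's limits by controlling request frequency and keeping usage patterns natural. However, this approach significantly limits how much data you can scrape in a given time frame.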

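A more scalable solution is to use a proxy service with IP rotation and retry mechanisms to circumvent IP blocks. Keep in mind that using proxies and other evasion techniques for web scraping can raise ethical concerns. Always make sure you comply with local and international data regulations, and read the website's terms of service (TOS) and policies carefully before proceeding.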
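As a rough illustration of proxy rotation with retries, the Python sketch below cycles through a list of proxies. It moves on to the next proxy whenever a request raises a connection error or comes back with a status that looks like a block. It uses only the standard library. Several details are assumptions made for illustration: the proxy URLs, the helper names `fetch_with_rotation` and `urllib_fetch`, and the choice of HTTP 403 and 429 as "blocked" signals. None of this is the API of any particular proxy provider.

```python
import itertools
import urllib.error
import urllib.request
from typing import Callable, Iterable, Optional, Tuple

# Status codes treated as "this IP is blocked or throttled".
# This is an assumption: sites signal blocks in different ways.
BLOCK_STATUSES = {403, 429}

Fetcher = Callable[[str, str], Tuple[int, bytes]]


def urllib_fetch(url: str, proxy: str) -> Tuple[int, bytes]:
    """Fetch `url` through `proxy` using only the standard library."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    )
    try:
        with opener.open(url, timeout=10) as resp:
            return resp.status, resp.read()
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions; report the status instead.
        return err.code, b""


def fetch_with_rotation(
    url: str,
    proxies: Iterable[str],
    fetch: Fetcher = urllib_fetch,
    max_attempts: int = 5,
) -> Tuple[int, bytes, str]:
    """Try `url` through successive proxies until one is not blocked.

    Returns (status, body, proxy_used). Raises RuntimeError if every
    attempt fails or is blocked.
    """
    pool = list(proxies)
    if not pool:
        raise ValueError("need at least one proxy")
    rotation = itertools.cycle(pool)  # round-robin over the pool
    last_error: Optional[Exception] = None
    for _ in range(max_attempts):
        proxy = next(rotation)
        try:
            status, body = fetch(url, proxy)
        except OSError as err:
            # Connection refused, timeouts, dead proxies, etc.
            last_error = err
            continue
        if status in BLOCK_STATUSES:
            last_error = RuntimeError(f"{proxy} was blocked (HTTP {status})")
            continue
        return status, body, proxy
    raise RuntimeError(f"all {max_attempts} attempts failed") from last_error
```

The `fetch` function is passed in as a parameter, which keeps the rotation logic separate from the HTTP client. You can swap in a different library, or a fake for testing, without touching the retry loop.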

CAPTCHA

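A CAPTCHA ("Completely Automated Public Turing test to tell Computers and Humans Apart") is a widely used security measure that stops web scrapers from accessing and extracting website data.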

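It presents challenges that require manual interaction, so the user must prove they are human before being granted access to the desired content. These challenges can take the form of image recognition, text puzzles, audio puzzles, or even user behavior analysis.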

Solution

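To get past CAPTCHAs, you can either solve them (manually or through a solving service) or take steps to avoid triggering them. Solving is generally recommended because it helps preserve data completeness, improves automation efficiency, offers reliability and stability, and stays within legal and ethical guidelines. Trying to avoid CAPTCHAs can lead to incomplete data, more manual work, reliance on non-compliant methods, and legal and ethical risks. Solving CAPTCHAs is therefore the more reliable and sustainable approach.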

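For example, CapSolver is a third-party service dedicated to solving CAPTCHAs. It provides an API that can be integrated directly into your scraping scripts or applications.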

By outsourcing CAPTCHA solving to a service like CapSolver, you can streamline your scraping workflow and reduce manual intervention.
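The sketch below shows roughly what such an integration can look like in Python: create a task, then poll until a token is ready. It assumes a JSON API at `https://api.capsolver.com` with `createTask` and `getTaskResult` endpoints and a `ReCaptchaV2TaskProxyLess` task type. Treat those endpoint names, the task type, and the response fields (`errorId`, `taskId`, `status`, `solution.gRecaptchaResponse`) as assumptions, and check them against the official CapSolver API documentation before relying on them. `CAPSOLVER_API`, `http_post_json`, and `solve_recaptcha_v2` are hypothetical names.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Dict

# Assumed base URL of CapSolver's JSON API; check the official docs.
CAPSOLVER_API = "https://api.capsolver.com"

Poster = Callable[[str, Dict[str, Any]], Dict[str, Any]]


def http_post_json(url: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """POST `payload` as JSON and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


def solve_recaptcha_v2(
    client_key: str,
    website_url: str,
    website_key: str,
    post: Poster = http_post_json,
    poll_interval: float = 3.0,
    max_polls: int = 40,
) -> str:
    """Create a reCAPTCHA v2 task and poll until a token is returned."""
    created = post(f"{CAPSOLVER_API}/createTask", {
        "clientKey": client_key,
        "task": {
            # Assumed task type for reCAPTCHA v2 without your own proxy.
            "type": "ReCaptchaV2TaskProxyLess",
            "websiteURL": website_url,
            "websiteKey": website_key,
        },
    })
    if created.get("errorId"):
        raise RuntimeError(created.get("errorDescription", "createTask failed"))
    task_id = created["taskId"]

    for _ in range(max_polls):
        time.sleep(poll_interval)  # give the solver time before each poll
        result = post(f"{CAPSOLVER_API}/getTaskResult",
                      {"clientKey": client_key, "taskId": task_id})
        if result.get("errorId"):
            raise RuntimeError(result.get("errorDescription", "task failed"))
        if result.get("status") == "ready":
            return result["solution"]["gRecaptchaResponse"]
    raise TimeoutError(f"task {task_id} not ready after {max_polls} polls")
```

The returned token is then submitted with the page's form. For reCAPTCHA v2 this is usually the `g-recaptcha-response` field, but exactly where it goes depends on the target page.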

Rate Limiting

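Rate limiting is a technique websites use to prevent abuse and various kinds of attacks. It caps the number of requests a client can make within a given time window. If the limit is exceeded, the website may throttle or block requests using techniques such as IP blocking or CAPTCHAs.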

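Rate limiting centers on identifying individual clients and monitoring their usage to keep them within the set limits. Identification can be based on the client's IP address or on techniques such as browser fingerprinting, which detects distinctive client characteristics. User-agent strings and cookies may also be inspected as part of the identification process.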

Solution

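There are several ways to work around rate limits. A simple one is to control the frequency and timing of requests so they resemble human behavior, for example by introducing random delays between requests or retrying failed requests after a pause. Other solutions include rotating IP addresses and customizing attributes such as user-agent strings and browser fingerprints.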
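Here is a minimal Python sketch of both timing ideas. It adds a random pause before every request. When the server answers with HTTP 429 or 503, it applies exponential backoff with "full jitter", meaning a random wait between zero and a ceiling that doubles on each retry. Several values are assumptions to tune per site: the function names, the default delay ranges, and the choice of retry status codes. `fetch` stands in for whatever single HTTP call your scraper makes.

```python
import random
import time
from typing import Callable, Optional

# Statuses that usually mean "slow down" (an assumption; tune per site).
RETRY_STATUSES = {429, 503}


def backoff_delay(attempt: int, rng: random.Random,
                  base: float = 1.0, cap: float = 60.0) -> float:
    """Full-jitter backoff: uniform in [0, min(cap, base * 2**attempt)]."""
    return rng.uniform(0, min(cap, base * 2 ** attempt))


def fetch_politely(
    fetch: Callable[[], int],
    max_retries: int = 5,
    min_gap: float = 1.0,
    max_gap: float = 3.0,
    rng: Optional[random.Random] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `fetch` after a random pause, backing off on 429/503.

    `fetch` performs one request and returns its HTTP status code.
    Returns the last status seen.
    """
    rng = rng if rng is not None else random.Random()
    status = 0
    for attempt in range(max_retries + 1):
        sleep(rng.uniform(min_gap, max_gap))  # human-like pause before each request
        status = fetch()
        if status not in RETRY_STATUSES:
            return status
        if attempt < max_retries:
            sleep(backoff_delay(attempt, rng))  # server pushed back: wait longer
    return status
```

Both the random source and `sleep` are injectable. This makes the timing reproducible in tests, and it lets you swap in `asyncio.sleep`-based logic if your scraper is asynchronous.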

Honeypot Traps

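Honeypot traps pose a significant challenge for scraping bots because they are designed specifically to deceive automated scripts. These traps consist of hidden elements or links that only bots will encounter.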

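The purpose of a honeypot is to identify and block scraping activity. Real users never interact with these hidden elements, so when a scraper encounters and interacts with one, it raises an alarm that can get the scraper banned from the site.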

Solution

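To overcome this challenge, you need to stay alert and avoid falling into honeypots. One effective strategy is to identify and skip hidden links. These links are typically styled with CSS properties such as display: none or visibility: hidden, which makes them invisible to human users but still detectable by scrapers.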

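By carefully analyzing the HTML structure and CSS properties of the pages you scrape, you can exclude or bypass these hidden links. This minimizes the risk of triggering honeypots and keeps your scraping process intact and stable.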
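Here is a minimal Python sketch of that idea using the standard-library `html.parser`. It separates visible links from links that sit inside hidden elements. An element counts as hidden if it has an inline `style` with `display: none` or `visibility: hidden`, or if it carries the HTML `hidden` attribute. This is a deliberate simplification. It will not catch links hidden through external stylesheets, CSS classes, off-screen positioning, or zero-size boxes, and detecting those generally requires a rendering browser. `VisibleLinkParser` and `is_inline_hidden` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

# Inline CSS declarations treated as "hidden" (a deliberately small set).
HIDING_DECLARATIONS = {("display", "none"), ("visibility", "hidden")}

# Void elements never get an end tag, so they must not be pushed on the stack.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}


def is_inline_hidden(attrs: Dict[str, Optional[str]]) -> bool:
    """True if the element is hidden by the `hidden` attribute or inline style."""
    if "hidden" in attrs:
        return True
    for declaration in (attrs.get("style") or "").lower().split(";"):
        prop, _, value = declaration.partition(":")
        value = value.replace("!important", "").strip()
        if (prop.strip(), value) in HIDING_DECLARATIONS:
            return True
    return False


class VisibleLinkParser(HTMLParser):
    """Split <a href> values into visible links and likely honeypot links."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: List[Tuple[str, bool]] = []  # (tag, hidden incl. ancestors)
        self.visible_links: List[str] = []
        self.hidden_links: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        parent_hidden = bool(self.stack) and self.stack[-1][1]
        hidden = parent_hidden or is_inline_hidden(attr_map)
        href = attr_map.get("href")
        if tag == "a" and href:
            (self.hidden_links if hidden else self.visible_links).append(href)
        if tag not in VOID_ELEMENTS:
            self.stack.append((tag, hidden))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag, tolerating unclosed <p>, <li>, etc.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break
```

If you are already driving a real browser, Playwright's `Locator.is_visible()` checks whether an element is actually rendered as visible. That also covers hiding done through stylesheets, not just inline styles.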

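Note that it is essential to respect website policies and terms of service when scraping. Make sure your scraping activities follow the ethical and legal guidelines set by the site owner.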

Dynamic Content

Beyond rate limits and blocks, web scrapers also face the challenge of detecting and handling dynamic content.

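Modern websites often rely heavily on JavaScript to add interactivity and to dynamically render parts of the user interface, additional content, or even entire pages.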

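With the rise of single-page applications (SPAs), JavaScript plays a key role in rendering nearly every aspect of a website. Other kinds of web applications also use JavaScript to load content asynchronously, enabling features such as infinite scrolling without refreshing or reloading the page. In these cases, parsing the HTML the server initially returns is not enough, because much of the content is not in it.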

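To scrape dynamic content successfully, you need to load and execute the underlying JavaScript. Doing this correctly in a custom script can be difficult, which is why many developers prefer headless browsers and web automation tools such as Playwright, Puppeteer, and Selenium.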

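With these tools, you can simulate a browser environment, execute JavaScript, and retrieve the fully rendered HTML, including any dynamically loaded content. This approach helps you capture all the information you need, even on sites that depend heavily on JavaScript to generate content.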
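Here is a minimal sketch using Playwright's synchronous Python API. It loads the page in headless Chromium and waits for a selector that only appears after client-side rendering. It can optionally scroll to trigger lazy or infinite-scroll loading, and then it returns the resulting HTML. It assumes Playwright is installed (`pip install playwright`, then `playwright install chromium`). The `fetch_rendered_html` name, the selector you pass, and the scroll distance and pause are illustrative choices, not requirements.

```python
def fetch_rendered_html(url: str, wait_selector: str,
                        scrolls: int = 0, timeout_ms: int = 30_000) -> str:
    """Return the page HTML after client-side JavaScript has rendered it."""
    # Imported lazily so this module still loads where Playwright is absent.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, wait_until="domcontentloaded", timeout=timeout_ms)
            # Wait for an element that exists only after rendering finishes.
            page.wait_for_selector(wait_selector, timeout=timeout_ms)
            # Scroll to trigger lazy loading / infinite scroll, if requested.
            for _ in range(scrolls):
                page.mouse.wheel(0, 5_000)
                page.wait_for_timeout(1_000)  # fixed pause; tune per site
            return page.content()
        finally:
            browser.close()
```

For example, `fetch_rendered_html("https://example.com/products", "div.product-card", scrolls=3)` would return the HTML after three scroll steps. The URL and selector here are hypothetical. Waiting on a specific selector is usually more dependable than waiting for the network to go quiet, because many pages keep polling in the background.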

Slow Page Loads

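When a website receives a large number of concurrent requests, its loading speed can suffer significantly. Page size, network latency, server performance, and the amount of JavaScript and other resources to load all contribute to the problem.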

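Slow page loads delay data retrieval, which slows down the whole scraping project, especially when many pages are involved. They can also cause timeouts and unpredictable scraping times. If some page elements fail to load properly, they can also lead to incomplete extraction or incorrect data.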

Solution

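To address this challenge, consider using browser automation tools such as Selenium or Puppeteer, which can drive headless browsers. These tools can wait until the page, or the specific elements you need, has finished loading before you extract data, which avoids incomplete or inaccurate information. Setting timeouts, retries, or refreshes and optimizing your code can also help reduce the impact of slow page loads.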
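Timeouts and retries can be combined in a small generic wrapper. The asyncio sketch below gives each attempt a hard time limit and retries with a linearly growing pause. The name `with_timeout_and_retry` and its default values are assumptions. `make_call` must return a fresh awaitable on every call, because a coroutine cannot be awaited twice. One example is `lambda: page.goto(url)` with Playwright's async API.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def with_timeout_and_retry(
    make_call: Callable[[], Awaitable[T]],
    timeout: float = 15.0,
    retries: int = 2,
    backoff: float = 1.0,
) -> T:
    """Await `make_call()` with a time limit, retrying on timeout.

    `make_call` must build a fresh awaitable each time (a coroutine can
    only be awaited once). Waits `backoff * n` seconds before retry n.
    """
    attempt = 0
    while True:
        try:
            return await asyncio.wait_for(make_call(), timeout=timeout)
        except asyncio.TimeoutError:
            attempt += 1
            if attempt > retries:
                raise  # give up: surface the timeout to the caller
            await asyncio.sleep(backoff * attempt)
```

Browser tools also accept their own timeout arguments (Playwright's `page.goto(url, timeout=...)`, for instance). A wrapper like this is mainly useful when you want one retry policy across different kinds of slow operations.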

Conclusion

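Web scraping comes with many challenges, including IP blocks, CAPTCHAs, rate limits, honeypot traps, dynamic content, and slow page loads. We can overcome them by using proxies, solving CAPTCHAs, controlling request frequency, avoiding traps, using headless browsers, and optimizing our code. By tackling these obstacles, we can make web scraping more efficient, collect valuable information, and stay compliant.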

FAQ: Common Questions About Web Scraping Challenges

1. What is web scraping, and why is it important?

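Web scraping is the process of automatically collecting and extracting data from websites. It is widely used for market research, SEO tracking, data analysis, and machine learning. Efficient scraping helps businesses gain insights and maintain a competitive edge.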

2. Why do websites block web scrapers?

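Websites block scrapers to prevent abuse, protect server performance, and safeguard private data. Common anti-bot methods include IP blocking, CAPTCHAs, and JavaScript fingerprinting.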

3. How can I solve CAPTCHAs while scraping?

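You can use a third-party CAPTCHA-solving service such as CapSolver. It provides an API that automatically solves reCAPTCHA, hCaptcha, and other CAPTCHA types, which keeps data collection running without interruption.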

4. What is the best way to avoid IP blocks when scraping a website?

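Use rotating proxies and control your request frequency. Sending too many requests in a short period can trigger rate limits or bans. Residential proxies and ethical scraping practices are strongly recommended.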

5. How do I handle dynamic or JavaScript-heavy content?

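Modern websites often use JavaScript frameworks such as React or Vue, which load content dynamically. Tools like Puppeteer, Playwright, or Selenium can simulate a browser environment to render and scrape complete page data.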

6. Are there legal or ethical issues with web scraping?

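Yes. Always follow the website's terms of service (ToS) and data privacy regulations such as the GDPR or CCPA. Focus on public data, and avoid scraping sensitive or restricted information.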

7. How can I speed up a slow web scraping project?

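Optimize your scripts, set appropriate timeouts, cache results, and use asynchronous requests. Solving CAPTCHAs efficiently with CapSolver and using fast proxies can also reduce latency and improve stability.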
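Here is a minimal asyncio sketch that combines asynchronous requests with caching. It fetches many URLs concurrently and caps the number in flight with a semaphore, so the speed-up does not turn into a burst that trips rate limits. URLs already in the cache are skipped, and duplicate URLs are requested only once. Several parts are assumptions: the name `crawl_concurrently`, its defaults, and the plain in-memory dict used as a cache. `fetch` stands in for an async HTTP call, for example one built on aiohttp or httpx.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Iterable, List, Optional


async def crawl_concurrently(
    urls: Iterable[str],
    fetch: Callable[[str], Awaitable[str]],
    max_concurrency: int = 5,
    cache: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Fetch `urls` concurrently, at most `max_concurrency` at a time.

    Results already in `cache` are reused, duplicate URLs are fetched once,
    and the output keeps the order of `urls`.
    """
    cache = {} if cache is None else cache
    url_list = list(urls)
    # dict.fromkeys de-duplicates while keeping first-seen order.
    pending = [u for u in dict.fromkeys(url_list) if u not in cache]
    semaphore = asyncio.Semaphore(max_concurrency)

    async def fetch_one(url: str) -> None:
        async with semaphore:  # limit how many requests are in flight
            cache[url] = await fetch(url)

    await asyncio.gather(*(fetch_one(u) for u in pending))
    return [cache[u] for u in url_list]
```

Passing the same `cache` dict across runs avoids re-downloading pages you already have. For longer-lived projects you might swap it for an on-disk store.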

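Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to complying with all applicable laws and regulations. Using the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited, and any such activity will be investigated. Our CAPTCHA-solving solutions help address CAPTCHA challenges during public data scraping while ensuring 100% compliance. We encourage responsible use of our services. For more information, please see our Terms of Service and Privacy Policy.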
