Web Crawler in Python and How to Avoid Getting Blocked When Web Crawling

Ethan Collins

Pattern Recognition Specialist

11-Jun-2024

The internet is a vast repository of information, ranging from news updates to niche data points buried deep within websites. Extracting this data manually is impractical, which is where web crawling shines. Web crawling, which is closely related to and often combined with web scraping, is the automated process of navigating through websites, extracting data, and storing it for purposes such as data analysis, market research, and content aggregation.

However, the landscape of web crawling is not without its challenges. Websites deploy sophisticated techniques to detect and block automated crawlers, ranging from simple rate limiting to advanced CAPTCHA challenges. As a web crawler developer, navigating these challenges effectively is key to maintaining reliable data extraction processes.

👌 Table of Contents

- Understanding Web Crawlers

- A Web Crawler in Python

- How to Avoid Getting Blocked When Web Crawling

- Conclusion

Understanding Web Crawlers

What Is a Web Crawler?

A web crawler, often likened to a digital explorer, systematically traverses the internet, visiting websites and indexing what it finds. Crawlers were originally built for search engines, which use the resulting index to return results quickly. They now power everything from coupon apps to SEO tools, gathering titles, images, keywords, and links as they go. Beyond indexing, they can scrape content, track webpage changes, and mine data. This is also why crawlers are called web spiders: they move across the World Wide Web link by link.

How Does a Web Crawler Work?

Web crawlers operate by systematically navigating through web pages, starting from a predefined set and following hyperlinks to discover new pages. Before initiating a crawl, these bots first consult a site's robots.txt file, which outlines guidelines set by website owners regarding which pages can be crawled and which links can be followed.
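The robots.txt check described above can be sketched with Python's standard `urllib.robotparser` module. This is a minimal illustration, not a complete crawler: the robots.txt content, the `example-crawler` user agent, and the `build_parser` helper are hypothetical, and the rules are parsed from a string so the example runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real crawler would download it from
# https://<host>/robots.txt, for example with set_url() followed by read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-crawler"  # hypothetical user agent name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    return robots


robots = build_parser(ROBOTS_TXT)

for url in (
    "https://example.com/index.html",
    "https://example.com/private/data.html",
):
    # can_fetch() reports whether the rules allow this user agent to fetch the URL
    print(url, "allowed:", robots.can_fetch(USER_AGENT, url))

# crawl_delay() returns the Crawl-delay value for the user agent, or None
print("crawl delay:", robots.crawl_delay(USER_AGENT))
```

Calling `can_fetch` before every request keeps the crawler within the limits the site owner has published.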

Given the vast expanse of the internet, web crawlers prioritize certain pages based on established rules. They may favor pages with numerous external links pointing to them, higher traffic volumes, or greater brand authority. This prioritization strategy is rooted in the assumption that pages with significant traffic and links are more likely to offer authoritative and valuable content sought by users. Algorithms also assist crawlers in assessing the content's relevance and the quality of links found on each page.
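One way to picture this prioritization is a priority queue (a "frontier") that always hands out the highest-scoring URL next. The sketch below is an assumption-laden illustration: the `Frontier` class is hypothetical, and the score is simply an inbound link count, whereas real search engine crawlers combine many signals that are not described here.

```python
import heapq


class Frontier:
    """Hypothetical URL frontier: pages with a higher score are crawled first."""

    def __init__(self):
        self._heap = []
        self._seen = set()
        self._counter = 0  # tie-breaker so equal scores keep insertion order

    def add(self, url, inbound_links):
        """Queue a URL once, scored by its (assumed) inbound link count."""
        if url in self._seen:
            return
        self._seen.add(url)
        # heapq is a min-heap, so the score is negated to pop the largest first
        heapq.heappush(self._heap, (-inbound_links, self._counter, url))
        self._counter += 1

    def pop(self):
        """Return the queued URL with the highest score."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


frontier = Frontier()
frontier.add("https://example.com/blog", inbound_links=3)
frontier.add("https://example.com/", inbound_links=120)
frontier.add("https://example.com/about", inbound_links=15)
frontier.add("https://example.com/", inbound_links=120)  # duplicate, ignored

order = [frontier.pop() for _ in range(len(frontier))]
print(order)
```

The home page comes out first because it has the most inbound links, followed by the about page and then the blog.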

During their exploration, web crawlers meticulously record meta tags from each site, which provide essential metadata and keyword information. This data plays a crucial role in how search engines rank and display pages in search results, aiding in users' navigation and information retrieval.
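As a rough sketch of how meta tags can be recorded, the example below collects the page title and `<meta>` name/content pairs. It uses the standard library's `html.parser` so it runs without extra packages (Beautiful Soup, introduced later in this article, would work as well). The `MetaTagParser` class and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Collects the <title> text and <meta> name/property -> content pairs."""

    def __init__(self):
        super().__init__()
        self.meta = {}
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and "content" in attrs:
            # Standard tags use name="...", Open Graph tags use property="..."
            key = attrs.get("name") or attrs.get("property")
            if key:
                self.meta[key.lower()] = attrs["content"]
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


# Hypothetical page used only for illustration
SAMPLE_HTML = """
<html><head>
<title>Example Page</title>
<meta name="description" content="A sample page for testing.">
<meta name="keywords" content="crawler, python">
<meta property="og:title" content="Example">
</head><body></body></html>
"""

meta_parser = MetaTagParser()
meta_parser.feed(SAMPLE_HTML)
print(meta_parser.title)
print(meta_parser.meta)
```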

A Web Crawler in Python

A web crawler in Python is an automated script designed to browse the internet methodically, starting from predefined seed URLs. It operates by making HTTP requests to web servers, retrieving HTML content, and then parsing this content using libraries like BeautifulSoup or lxml. These libraries enable the crawler to extract relevant information such as page titles, links, images, and text.

Python's versatility in handling web requests and parsing HTML makes it particularly suitable for developing web crawlers. Crawlers typically adhere to a set of rules defined in a site's robots.txt file, which specifies which parts of the site are open for crawling and which should be excluded. This adherence helps maintain ethical crawling practices and respect site owner preferences.

Beyond indexing pages for search engines, Python web crawlers are used for various purposes including data mining, content aggregation, monitoring website changes, and even automated testing. By following links within pages, crawlers navigate through websites, building a map of interconnected pages that mimic the structure of the web. This process allows them to systematically gather data from a wide range of sources, aiding in tasks such as competitive analysis, market research, and information retrieval.
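The link-following process can be sketched as a breadth-first traversal that records which pages link to which. This is a minimal illustration under stated assumptions: `crawl`, `extract_links`, and `LinkParser` are hypothetical helpers, the crawl is limited to the seed's host, and pages come from an in-memory dictionary (`FAKE_SITE`) instead of real HTTP requests so the example runs offline. In practice `fetch` would wrap an HTTP client such as `requests`.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(base_url, html):
    """Return absolute, fragment-free URLs for all links in the page."""
    parser = LinkParser()
    parser.feed(html)
    return [urldefrag(urljoin(base_url, href)).url for href in parser.links]


def crawl(seed, fetch, max_pages=50):
    """Breadth-first crawl from seed; returns {page URL: [linked URLs]}."""
    host = urlparse(seed).netloc
    queue = deque([seed])
    seen = {seed}
    site_map = {}
    while queue and len(site_map) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be retrieved
            continue
        links = extract_links(url, html)
        site_map[url] = links
        for link in links:
            # Stay on the seed's host and never queue the same URL twice
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return site_map


# Hypothetical in-memory site standing in for real HTTP responses
FAKE_SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="https://other.test/">ext</a>',
    "https://example.com/b": '<a href="/">home</a>',
}

site_map = crawl("https://example.com/", FAKE_SITE.get)
for page, links in site_map.items():
    print(page, "->", links)
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, as the home page and `/b` do here.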

Building a Python Web Crawler

Before diving into building a web crawler, it's crucial to set up your development environment with the necessary tools and libraries.

Prerequisites

- Python: Install Python 3.x from python.org.

- Requests Library: For making HTTP requests.

- Beautiful Soup: For parsing HTML and XML documents.

- Selenium (optional): For handling JavaScript-rendered content and CAPTCHAs.

```bash
pip install requests beautifulsoup4 selenium
```

Building a Simple Web Crawler

Let's create a basic web crawler using Python and demonstrate how to extract links and text from a webpage.

```python
import requests
from bs4 import BeautifulSoup

def simple_crawler(url):
    # Send HTTP request
    response = requests.get(url)

    # Check if request was successful
    if response.status_code == 200:
        # Parse content with BeautifulSoup
        soup = BeautifulSoup(response.text, 'html.parser')

        # Example: Extract all links from the page
        links = soup.find_all('a', href=True)
        for link in links:
            print(link['href'])

        # Example: Extract text from specific elements
        headings = soup.find_all(['h1', 'h2', 'h3'])
        for heading in headings:
            print(heading.text)
    else:
        print(f"Failed to retrieve content from {url}")

# Example usage
simple_crawler('https://example.com')
```

How to Avoid Getting Blocked When Web Crawling

When you embark on web crawling in Python, navigating around blocks becomes a critical challenge. Numerous websites fortify their defenses with anti-bot measures, designed to detect and thwart automated tools, thereby blocking access to their pages.

To overcome these hurdles, consider implementing the following strategies:

1. Dealing with CAPTCHAs

CAPTCHAs are a common defense mechanism against automated crawlers. They challenge users to prove they are human by completing tasks like identifying objects or entering text. One way to handle them is to incorporate a CAPTCHA-solving service such as CapSolver into your web scraping workflow. CapSolver provides APIs and tools to solve various types of CAPTCHAs programmatically and can be integrated with your Python scripts. A short guide follows below.

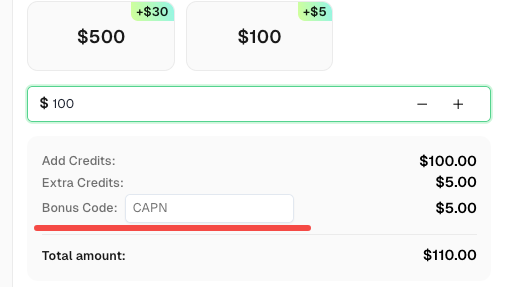

Redeem Your CapSolver Bonus Code

Don’t miss the chance to further optimize your operations! Use the bonus code CAPN when topping up your CapSolver account and receive an extra 5% bonus on each recharge, with no limits. Visit CapSolver to redeem your bonus now!

How to Solve Any CAPTCHA with CapSolver Using Python:

Prerequisites

- A working proxy

- Python installed

- CapSolver API Key

🤖 Step 1: Install Necessary Packages

Run the following command to install the required package:

```bash
pip install capsolver
```

Here is an example for reCAPTCHA v2:

👨‍💻 Python Code to Solve reCAPTCHA v2 with Your Proxy

Here's a Python sample script to accomplish the task:

```python
import capsolver

# Consider using environment variables for sensitive information
PROXY = "http://username:password@host:port"
capsolver.api_key = "Your Capsolver API Key"
PAGE_URL = "PAGE_URL"
PAGE_KEY = "PAGE_SITE_KEY"

def solve_recaptcha_v2(url, key):
    solution = capsolver.solve({
        "type": "ReCaptchaV2Task",
        "websiteURL": url,
        "websiteKey": key,
        "proxy": PROXY
    })
    return solution

def main():
    print("Solving reCaptcha v2")
    solution = solve_recaptcha_v2(PAGE_URL, PAGE_KEY)
    print("Solution: ", solution)

if __name__ == "__main__":
    main()
```

👨‍💻 Python Code to Solve reCAPTCHA v2 without Proxy

Here's a Python sample script to accomplish the task:

```python
import capsolver

# Consider using environment variables for sensitive information
capsolver.api_key = "Your Capsolver API Key"
PAGE_URL = "PAGE_URL"
PAGE_KEY = "PAGE_SITE_KEY"

def solve_recaptcha_v2(url, key):
    solution = capsolver.solve({
        "type": "ReCaptchaV2TaskProxyless",
        "websiteURL": url,
        "websiteKey": key,
    })
    return solution

def main():
    print("Solving reCaptcha v2")
    solution = solve_recaptcha_v2(PAGE_URL, PAGE_KEY)
    print("Solution: ", solution)

if __name__ == "__main__":
    main()
```

2. Avoiding IP Bans and Rate Limits

Websites often impose restrictions on the number of requests a crawler can make within a given time frame to prevent overload and abuse.
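When a site enforces such a limit, it commonly answers with HTTP 429 (Too Many Requests), sometimes with a `Retry-After` header. A hedged sketch of one way to react is to wait and retry with exponential backoff. The `fetch_with_backoff` function and the `send` callable are hypothetical: `send(url)` is assumed to return a `(status, headers, body)` tuple, and the fake responses below stand in for a real HTTP client so the example runs offline.

```python
import time


def fetch_with_backoff(send, url, max_retries=4, base_delay=1.0, sleep=time.sleep):
    """Call send(url) and retry on 429/503, waiting longer after each failure.

    send is assumed to return (status, headers, body). Returns (status, body),
    with body set to None if every attempt was rate limited.
    """
    for attempt in range(max_retries + 1):
        status, headers, body = send(url)
        if status not in (429, 503):
            return status, body
        if attempt == max_retries:
            break
        retry_after = headers.get("Retry-After", "")
        if retry_after.isdigit():
            # The server stated how many seconds to wait
            delay = float(retry_after)
        else:
            # Otherwise double the wait on every attempt: 1s, 2s, 4s, ...
            delay = base_delay * (2 ** attempt)
        sleep(delay)
    return status, None


# Hypothetical server behavior: rate limited twice, then successful
fake_responses = iter([
    (429, {"Retry-After": "3"}, ""),
    (429, {}, ""),
    (200, {}, "<html>ok</html>"),
])

waits = []  # records the delays instead of actually sleeping
result = fetch_with_backoff(
    lambda url: next(fake_responses),
    "https://example.com/",
    sleep=waits.append,
)
print(result, waits)
```

Passing `sleep=waits.append` is only a convenience for the demo; leaving the default `time.sleep` makes the function actually pause between attempts.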

Strategies to Avoid Detection:

- Rotate IP Addresses: Use proxy servers or VPNs to switch IP addresses and avoid triggering rate limits.

- Respect robots.txt: Check and adhere to the rules specified in a site's robots.txt file to avoid being blocked.

- Politeness Policies: Implement delays between requests (crawl delay) to simulate human browsing behavior.
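The proxy rotation and delay strategies above can be combined in a small helper. This is a sketch under assumptions: the `PoliteSession` class and the proxy URLs are hypothetical placeholders, and a fake clock is injected so the demo finishes instantly. The returned dictionary has the shape the `requests` library accepts through its `proxies` argument.

```python
import itertools
import time


class PoliteSession:
    """Hypothetical helper: enforces a minimum delay and rotates proxies."""

    def __init__(self, proxies, min_delay=1.0, clock=time.monotonic, sleep=time.sleep):
        self._proxies = itertools.cycle(proxies)  # round-robin rotation
        self._min_delay = min_delay
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def next_request_settings(self):
        """Wait if the last request was too recent, then return a proxy mapping."""
        if self._last_request is not None:
            wait = self._min_delay - (self._clock() - self._last_request)
            if wait > 0:
                self._sleep(wait)
        self._last_request = self._clock()
        proxy = next(self._proxies)
        return {"http": proxy, "https": proxy}


# Placeholder proxy addresses; replace with proxies you are allowed to use
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]

# Fake clock and sleep so the demo runs instantly; omit both arguments
# to use real time in an actual crawler.
fake_time = [0.0]
waits = []


def fake_sleep(seconds):
    waits.append(seconds)
    fake_time[0] += seconds


session = PoliteSession(
    PROXIES, min_delay=2.0, clock=lambda: fake_time[0], sleep=fake_sleep
)
used = [session.next_request_settings()["http"] for _ in range(3)]
print(used)
print(waits)

# With the requests library, each call would look like:
# requests.get(url, proxies=session.next_request_settings(), timeout=10)
```

A fixed minimum delay is the simplest politeness policy. If the site's robots.txt declares a crawl delay, using that value for `min_delay` is a reasonable choice.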

Conclusion

Web crawling empowers businesses and researchers to access vast amounts of data efficiently. However, navigating the challenges of automated detection and blocking requires strategic planning and adherence to ethical standards. By leveraging Python's robust libraries and implementing best practices, developers can build resilient crawlers capable of extracting valuable insights while respecting the boundaries set by websites.

In essence, mastering web crawling involves not only technical expertise but also a keen understanding of web etiquette and legal considerations. With these tools and strategies at your disposal, you can harness the power of web crawling responsibly and effectively in your projects.

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
