Using WADE Anti-Detect Browser in Data Scraping

Ethan Collins

Pattern Recognition Specialist

29-Aug-2024

The Whoer.net team has developed an anti-detect browser called the Whoer Anti-Detect Browser, or simply WADE. The tool is designed to keep you safe and efficient while working online. In this article, we explore how the WADE anti-detect browser works and how it can be combined with the CapSolver service to support your data scraping efforts.

The Importance of Anti-Detect Browsers for Data Scraping

Anti-detect browsers play a crucial role in the data scraping process, helping scrapers maintain anonymity, avoid detection, and bypass restrictions set by websites. Here, we’ll discuss the key requirements for browsers used in scraping, the unique features of the WADE Anti-Detect Browser, and how it enhances the web scraping experience.

Requirements for Browsers Used in Data Scraping

- Anonymity and Privacy: Scrapers need to keep their identity hidden so that websites do not block their access. The WADE anti-detect browser helps mask browser fingerprints and, together with proxies, IP addresses, providing a layer of privacy.

- Session Management: An effective scraping process requires managing multiple sessions simultaneously. The browser should allow users to create and switch between different profiles easily.

- Customization Options: Different websites have different anti-bot measures. A good anti-detect browser should allow customizable settings to mimic various devices and browsers, helping to bypass these restrictions.

- Speed and Efficiency: Data scraping can be resource-intensive. A browser optimized for speed, as WADE aims to be, enables quick data extraction and saves time.

- Compatibility with Scraping Tools: The browser should integrate with common scraping tools and libraries, making the process smoother and more efficient.

How WADE Anti-Detect Browser Helps with Scraping

The WADE Anti-Detect Browser is specifically designed to meet the needs of data scrapers by providing a suite of features that enhance the scraping experience. Here’s how it helps:

- WADE allows users to create a virtual fingerprint distinct from their real one. This feature is vital for preventing websites from tracking users’ activities, which often leads to price changes or access restrictions.

- Many websites limit access based on geographical location or other browser parameters. WADE is designed to work with proxies, enabling users to modify these settings and access content that may be restricted in standard browsers. This combination helps users bypass geo-restrictions while maintaining their anonymity.

- WADE is regularly updated to stay ahead of advanced detection systems. Its core updates give users the latest features and security improvements, and testing against advanced checkers helps confirm that the browser holds up against stringent anti-bot measures.

- The browser has a simple, intuitive interface that makes it easy to navigate and use its features.

- 7-Day Free Trial: WADE offers a 7-day free trial, allowing potential users to explore its features and see firsthand how it can support their data scraping efforts.

How to Use WADE Anti-Detect Browser for Web Scraping

The WADE Anti-Detect Browser is a powerful tool for web scraping that helps ensure your anonymity and bypass restrictions set by websites. Here’s a step-by-step guide on how to use it for data scraping.

Step 1: Download the WADE Anti-Detect Browser

Download WADE: Visit the official website to download the latest version of the WADE Anti-Detect Browser. Follow the installation instructions, then open it on your computer.

Step 2: Set Up User Profiles

- Create User Profiles: Launch WADE and create multiple user profiles. Each profile will have a unique browser fingerprint, which helps avoid blocks from target sites.

- Configure Settings: In the settings of each profile, you can modify browser parameters such as screen resolution, language, and other preferences to mimic various devices and locations, or you can use the default settings generated by WADE.

Step 3: Set Up Proxies

- Connect Proxies: WADE supports the use of proxy servers, allowing you to change your IP address. In the browser settings, add your proxy details (IP address, port, username, and password if needed).

- Test Proxies: After adding proxies, make sure to test their functionality to ensure everything is configured correctly.
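It can also help to check a proxy outside the browser before entering it into a profile. The following is a minimal sketch using only Python's standard library; it is not part of WADE, and the function names, the default `https://example.com/` test URL, and the sample address are placeholders chosen for illustration.

```python
import http.client
import urllib.request
from typing import Optional
from urllib.parse import quote


def build_proxy_url(host: str, port: int,
                    username: Optional[str] = None,
                    password: Optional[str] = None,
                    scheme: str = "http") -> str:
    """Assemble a proxy URL from the same fields entered in the browser settings."""
    if username:
        # Percent-encode credentials so characters like '@' or ':' stay unambiguous.
        credentials = quote(username, safe="")
        if password:
            credentials += ":" + quote(password, safe="")
        return f"{scheme}://{credentials}@{host}:{port}"
    return f"{scheme}://{host}:{port}"


def proxy_is_reachable(proxy_url: str,
                       test_url: str = "https://example.com/",
                       timeout: float = 10.0) -> bool:
    """Return True if test_url can be fetched through the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # Connection failures, timeouts, and HTTP errors all count as "not working".
        return False


# Example (placeholder address from the documentation-only 203.0.113.0/24 range):
# url = build_proxy_url("203.0.113.10", 8080, "user", "p@ss")
# print(proxy_is_reachable(url))
```

A proxy that fails this check will most likely fail inside the browser as well, so it is worth fixing the credentials or replacing the proxy before assigning it to a profile.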

Step 4: Prepare for Scraping

- Identify Your Target Site: Choose the website from which you plan to collect data. Understand its structure and the elements you want to extract.

- Open Target Site in WADE: Launch the WADE browser, select the desired profile, and navigate to the target website. Ensure that all settings, including proxies, are applied.

Step 5: Start Data Scraping

Depending on your preferences, you can integrate WADE with various scraping tools and scripts.

-

Use Scraping Tools: First, in the WADE Anti-Detect Browser, you can install various browser extensions specifically designed for web scraping. These extensions enable you to collect data directly from the pages you are browsing. For instance, some popular options include Web Scraper, which allows you to create sitemaps for your target websites and extract data in a structured format like CSV or JSON, and Data Miner, which enables you to scrape data from web pages with customizable scraping recipes. With these tools, you can define the elements you want to scrape, such as text, links, and images, and set up pagination to handle multiple pages efficiently. After installation, you can activate the extension while browsing a target website and follow the extension’s interface to set up your scraping criteria and start collecting data.

-

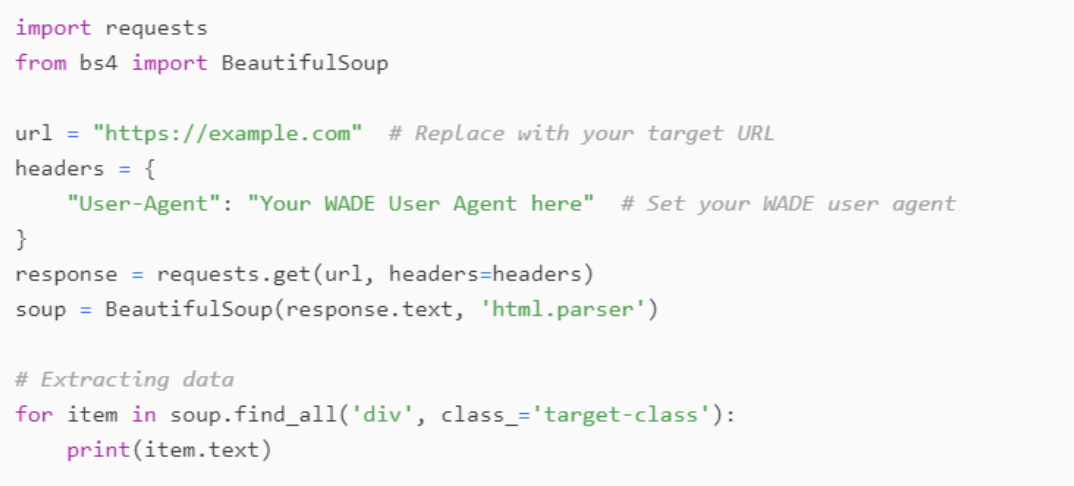

Writing Your Own Scripts: You can create your own scraping scripts using programming languages like Python. Two popular libraries for web scraping are BeautifulSoup and Scrapy.

BeautifulSoup is ideal for parsing HTML and XML documents, making it easy to extract data. It is particularly useful for navigating through the Document Object Model (DOM) and selecting specific elements based on tags, classes, or IDs.

Here is a simple example of how to use BeautifulSoup to extract data:
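The sketch below is a minimal illustration rather than a complete scraper. It assumes the `beautifulsoup4` package is installed, and the HTML fragment, the `product` and `price` class names, and the `extract_products` helper are hypothetical stand-ins for whatever structure your target page actually uses.

```python
from typing import Dict, List

from bs4 import BeautifulSoup

# Hypothetical markup standing in for a page already fetched from the target site.
SAMPLE_HTML = """
<ul id="products">
  <li class="product"><a href="/item/1">Widget</a> <span class="price">$9.99</span></li>
  <li class="product"><a href="/item/2">Gadget</a> <span class="price">$19.50</span></li>
</ul>
"""


def extract_products(html: str) -> List[Dict[str, str]]:
    """Collect name, link, and price from every <li class="product"> element."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for item in soup.find_all("li", class_="product"):
        link = item.find("a")
        price = item.find("span", class_="price")
        if link is None or price is None:
            continue  # skip entries that lack the expected child elements
        products.append({
            "name": link.get_text(strip=True),
            "url": link.get("href", ""),
            "price": price.get_text(strip=True),
        })
    return products


if __name__ == "__main__":
    for product in extract_products(SAMPLE_HTML):
        print(product)
```

In a real run, the `html` argument would be the page source obtained from the target site instead of the sample string.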

Scrapy, on the other hand, is a powerful web crawling and scraping framework designed for building complex crawlers. It offers features that allow you to handle requests, parse data, and manage cookies and sessions more efficiently than with basic scripts.

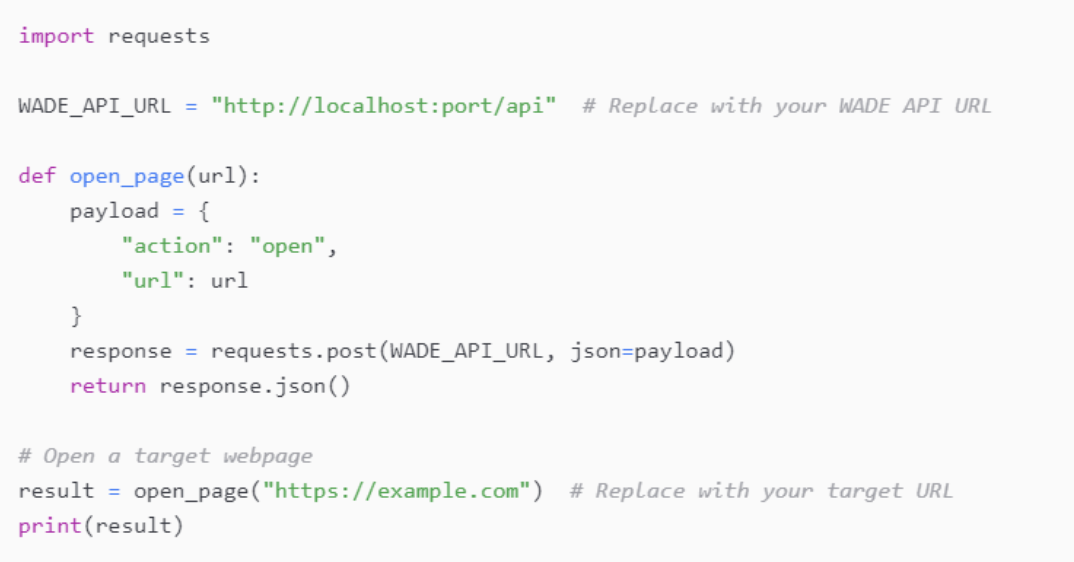

- Using the WADE API: The WADE browser also provides an API that allows developers to interact programmatically with the browser. This feature is beneficial for automating various tasks.

With the WADE API, you can automate page navigation by sending commands to open URLs, click buttons, and fill out forms. You can also interact with specific elements on a page, extract text, click links, or submit forms without needing to manually interact with the browser.

Additionally, managing multiple sessions is straightforward; you can create new profiles via the API, which facilitates running concurrent scraping tasks.

Here is an example of how to use the WADE API to open a page:

With both your own scripts and the WADE API, you can create a powerful scraping setup that allows for flexibility and efficiency.

- Combining the Tools

You can effectively combine the WADE Anti-Detect Browser with CapSolver, browser extensions, and scripts for a more robust scraping solution. For instance, when scraping websites that utilize CAPTCHAs, CapSolver can automate the CAPTCHA solving process, allowing you to bypass these obstacles seamlessly.
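As a rough sketch of how a script might request a CAPTCHA solution, the example below follows the create-task-then-poll pattern of CapSolver's HTTP API. The endpoint paths, the `ReCaptchaV2TaskProxyLess` task type, and the response fields reflect CapSolver's public documentation as understood at the time of writing and should be verified against the current docs; the function names, polling interval, and retry limit are illustrative choices.

```python
import json
import time
import urllib.request
from typing import Callable

API_BASE = "https://api.capsolver.com"  # check against CapSolver's current docs


def post_json(url: str, payload: dict) -> dict:
    """Send a JSON POST request and decode the JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


def solve_recaptcha_v2(client_key: str, website_url: str, website_key: str,
                       post: Callable[[str, dict], dict] = post_json,
                       poll_interval: float = 3.0,
                       max_polls: int = 40) -> str:
    """Create a solving task, then poll until a token is ready."""
    created = post(f"{API_BASE}/createTask", {
        "clientKey": client_key,
        "task": {
            "type": "ReCaptchaV2TaskProxyLess",
            "websiteURL": website_url,
            "websiteKey": website_key,
        },
    })
    if created.get("errorId"):
        raise RuntimeError(f"createTask failed: {created.get('errorDescription')}")
    task_id = created["taskId"]

    for _ in range(max_polls):
        result = post(f"{API_BASE}/getTaskResult",
                      {"clientKey": client_key, "taskId": task_id})
        if result.get("errorId"):
            raise RuntimeError(f"getTaskResult failed: {result.get('errorDescription')}")
        if result.get("status") == "ready":
            return result["solution"]["gRecaptchaResponse"]
        time.sleep(poll_interval)  # task still processing; wait before retrying
    raise TimeoutError("CAPTCHA task did not finish within the polling limit")
```

The `post` parameter is injectable so the polling logic can be exercised without network access or an API key. The returned token would then be submitted with the form on the target page.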

Step 6: Save and Process Data

-

Save Collected Data: After scraping, it’s important to save the data in an accessible format, such as CSV or JSON. CSV files are great for tabular data and easy to analyze with tools like Excel. JSON is useful for structured data and can integrate smoothly with many applications. For larger datasets, consider using a database to manage and retrieve your data efficiently.

-

Check and Analyze: Once your data is saved, review it for any errors or inconsistencies. Validate that all necessary fields are present and check for duplicates. Cleaning the data may involve correcting formats and standardizing entries. After validation, analyze the data to extract insights, using statistical methods or visualization tools as needed.
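The saving and checking steps above can be sketched with Python's standard library. The field names in `REQUIRED_FIELDS` and the choice of `url` as the deduplication key are assumptions for illustration; adapt them to the records your scraper actually produces.

```python
import csv
import json
from typing import Dict, Iterable, List, Sequence, Tuple

REQUIRED_FIELDS = ("name", "url", "price")  # hypothetical schema


def clean_records(records: Iterable[Dict[str, str]],
                  required: Sequence[str] = REQUIRED_FIELDS,
                  key: str = "url") -> Tuple[List[dict], List[dict]]:
    """Trim whitespace, reject rows with missing fields, and drop duplicates by key."""
    seen = set()
    valid, rejected = [], []
    for record in records:
        normalized = {k: v.strip() if isinstance(v, str) else v
                      for k, v in record.items()}
        if any(not normalized.get(field) for field in required):
            rejected.append(record)  # keep the original row for later inspection
            continue
        if normalized[key] in seen:
            continue  # duplicate of a row already accepted
        seen.add(normalized[key])
        valid.append(normalized)
    return valid, rejected


def save_csv(records: List[dict], path: str,
             fieldnames: Sequence[str] = REQUIRED_FIELDS) -> None:
    """Write records as a CSV file with a header row."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(records)


def save_json(records: List[dict], path: str) -> None:
    """Write records as a pretty-printed JSON array."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(records, handle, ensure_ascii=False, indent=2)
```

Returning the rejected rows separately, rather than discarding them silently, makes it easier to spot selectors that have stopped matching on the target site.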

Conclusion

The WADE Anti-Detect Browser offers valuable features for anyone involved in web scraping. Its focus on privacy, with capabilities like virtual fingerprinting and seamless proxy integration, helps users navigate the challenges of accessing restricted content while minimizing the risk of detection.

With an intuitive interface, WADE makes it easy to configure settings and manage multiple user profiles, catering to both novice and experienced scrapers. By incorporating WADE into your data collection strategy, you can enhance your efficiency and effectiveness, ensuring that you gather the insights you need while maintaining your online anonymity. Whether for research, market analysis, or competitive intelligence, WADE is a reliable tool to support your web scraping endeavors.

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
