Web Scraping with Botright and Python in 2025

Lucas Mitchell

Automation Engineer

14-Nov-2024

Introduction to Botright

Botright is a Python library for web automation, designed to cope with the increasingly sophisticated bot detection systems that websites deploy. Beyond basic page interactions, it provides fine-grained controls that make automated browsing look human. This focus on simulating human behavior matters when you need to access websites that would otherwise block or throttle bots.

Built on top of Selenium WebDriver, Botright offers a high-level API that abstracts complex browser interactions into simple commands, so both beginners and advanced users can build scrapers and automation scripts without managing low-level browser commands. This makes it a good fit for projects ranging from simple data collection to multi-step web tasks that need to hold up against bot-detection algorithms.

Why Choose Botright?

Botright provides several features that make it stand out in the automation landscape:

- Human-Like Interactions: Botright’s design centers on simulating real user actions, such as smooth mouse movements, natural typing patterns, and timing delays. These behaviors reduce the risk of detection and provide more reliable access to content that is typically restricted to genuine users.

- Browser State Persistence: By supporting browser profiles, Botright lets you maintain the session state across multiple automation runs. This feature is particularly useful for tasks that require login persistence or where specific cookies and cache states must be preserved.

- Ease of Use: Despite its advanced capabilities, Botright is remarkably user-friendly. Its API is structured to streamline complex automation tasks, removing much of the technical overhead that usually comes with Selenium setups. Beginners can get started quickly, while experts can leverage Botright’s flexibility to build highly customized solutions.

- Scalability for Complex Workflows: Botright adapts well to more advanced tasks, including handling AJAX-powered sites, managing paginated data extraction, solving CAPTCHAs, and more. Paired with CAPTCHA solvers like CapSolver, Botright can handle workflows that require CAPTCHA bypassing, allowing you to automate even heavily guarded websites.

- Integrated Extensions and Plugins: Botright supports the inclusion of various extensions and plugins to enhance automation capabilities. For instance, using tools like CapSolver within Botright helps manage CAPTCHA challenges, unlocking a wider range of websites for scraping or automation.

Setting Up Botright

Before we begin, ensure you have Python 3.7 or higher installed on your system. Follow these steps to set up Botright:

- Install Botright:

```bash
pip install botright
```

- Install the WebDriver Manager:

Botright relies on the `webdriver_manager` package to manage browser drivers.

```bash
pip install webdriver-manager
```

- Verify the Installation:

Create a new Python file and import Botright to ensure it's installed correctly.

```python
from botright import Botright
```

If no errors occur, Botright is installed correctly.
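As a complement to the import check above, the following is a minimal standard-library sketch that reports whether the two packages can be located, without raising an exception when one is missing. The helper name `is_installed` is an assumption made for this example.

```python
import importlib.util


def is_installed(module_name):
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # Module names match the two packages installed in the steps above.
    for name in ("botright", "webdriver_manager"):
        status = "found" if is_installed(name) else "missing"
        print(f"{name}: {status}")
```

A "missing" line usually means the package was installed into a different Python environment than the one running the script.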

Creating Basic Scrapers

Let's create simple scripts to scrape data from quotes.toscrape.com using Botright.

Scraping Quotes

Script: scrape_quotes.py

```python
from botright import Botright


def scrape_quotes():
    with Botright() as bot:
        bot.get("https://quotes.toscrape.com/")
        quotes = bot.find_elements_by_css_selector("div.quote")
        for quote in quotes:
            text = quote.find_element_by_css_selector("span.text").text
            author = quote.find_element_by_css_selector("small.author").text
            print(f"\"{text}\" - {author}")


if __name__ == "__main__":
    scrape_quotes()
```

Run the script:

```bash
python scrape_quotes.py
```

Output:

```
“The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.” - Albert Einstein
...
```

Explanation:

- We use `Botright` as a context manager to ensure proper setup and teardown.
- We navigate to the website using `bot.get()`.
- We find all quote elements and extract the text and author.
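To show what the selectors `div.quote`, `span.text`, and `small.author` pick out, here is a browser-free sketch that applies the same extraction logic to a small HTML string using only the standard library. `SAMPLE_HTML` is made-up markup that mimics the layout those selectors imply, and `QuoteParser` and `parse_quotes` are hypothetical names; none of this is part of Botright.

```python
from html.parser import HTMLParser

# Hypothetical sample markup mirroring the div.quote / span.text / small.author layout.
SAMPLE_HTML = """
<div class="quote">
  <span class="text">A sample quote.</span>
  <span>by <small class="author">Sample Author</small></span>
</div>
<div class="quote">
  <span class="text">Another sample quote.</span>
  <span>by <small class="author">Second Author</small></span>
</div>
"""


class QuoteParser(HTMLParser):
    """Collect (text, author) pairs from quote blocks."""

    def __init__(self):
        super().__init__()
        self.quotes = []     # finished (text, author) pairs
        self._field = None   # "text", "author", or None while outside both
        self._current = {}   # fields gathered for the quote being parsed

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "quote" in classes:
            self._current = {}
        elif tag == "span" and "text" in classes:
            self._field = "text"
        elif tag == "small" and "author" in classes:
            self._field = "author"

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = self._current.get(self._field, "") + data

    def handle_endtag(self, tag):
        if self._field and tag in ("span", "small"):
            finished = self._field
            self._field = None
            # The author is the last field in each block, so emit the pair here.
            if finished == "author" and "text" in self._current:
                self.quotes.append(
                    (self._current["text"].strip(), self._current["author"].strip())
                )


def parse_quotes(html):
    parser = QuoteParser()
    parser.feed(html)
    return parser.quotes


for text, author in parse_quotes(SAMPLE_HTML):
    print(f"{text} - {author}")
```

Keeping the parsing step separate from the browser session makes it possible to test the extraction logic on saved pages without launching a browser.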

Handling Pagination

Script: scrape_quotes_pagination.py

```python
from botright import Botright


def scrape_all_quotes():
    with Botright() as bot:
        bot.get("https://quotes.toscrape.com/")
        while True:
            quotes = bot.find_elements_by_css_selector("div.quote")
            for quote in quotes:
                text = quote.find_element_by_css_selector("span.text").text
                author = quote.find_element_by_css_selector("small.author").text
                print(f"\"{text}\" - {author}")

            # Check if there is a next page
            next_button = bot.find_elements_by_css_selector('li.next > a')
            if next_button:
                next_button[0].click()
            else:
                break


if __name__ == "__main__":
    scrape_all_quotes()
```

Explanation:

- We loop through pages by checking if the "Next" button is available.
- We use `find_elements_by_css_selector` to locate elements.
- We click the "Next" button to navigate to the next page.
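A `while True` pagination loop can run forever if a site links pages in a cycle. The sketch below isolates the loop logic and adds two guards: a set of visited URLs and a page cap. It is a standalone illustration that assumes a hypothetical `fetch_page(url)` callable returning `(items, next_url)`; here an in-memory dictionary stands in for the browser.

```python
def crawl_pages(fetch_page, start_url, max_pages=50):
    """Follow "next" links until none is left or `max_pages` is reached.

    `fetch_page(url)` is a hypothetical callable returning (items, next_url),
    where next_url is None on the last page.
    """
    collected = []
    seen = set()
    url = start_url
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        items, url = fetch_page(url)
        collected.extend(items)
    return collected


# In-memory stand-in for a three-page site (illustrative data only).
FAKE_SITE = {
    "/page/1/": (["q1", "q2"], "/page/2/"),
    "/page/2/": (["q3"], "/page/3/"),
    "/page/3/": (["q4"], None),
}

print(crawl_pages(FAKE_SITE.__getitem__, "/page/1/"))  # ['q1', 'q2', 'q3', 'q4']
```

The cap of 50 pages is an arbitrary default; pick a value that matches the size of the site you are scraping.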

Scraping Dynamic Content

Script: scrape_dynamic_content.py

```python
from botright import Botright
import time


def scrape_tags():
    with Botright() as bot:
        bot.get("https://quotes.toscrape.com/")

        # Click on the 'Top Ten tags' link to load tags dynamically
        bot.click('a[href="/tag/"]')

        # Wait for the dynamic content to load
        time.sleep(2)

        tags = bot.find_elements_by_css_selector("span.tag-item > a")
        for tag in tags:
            tag_name = tag.text
            print(f"Tag: {tag_name}")


if __name__ == "__main__":
    scrape_tags()
```

Explanation:

- We navigate to the tags page by clicking the link.
- We wait for the dynamic content to load using `time.sleep()`.
- We extract and print the tags.
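A fixed `time.sleep()` either waits too long or not long enough. One common alternative is to poll for a condition with a deadline. The helper below is a generic standard-library sketch, not a Botright feature; `wait_until` and the simulated `tags_loaded` check are hypothetical names used for illustration.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.25):
    """Poll `condition()` until it returns a truthy value or `timeout` elapses.

    Returns the truthy value, or None if the deadline passes first.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            return None
        time.sleep(interval)


# Simulated dynamic content that only "appears" on the third check.
attempts = {"count": 0}


def tags_loaded():
    attempts["count"] += 1
    return ["love", "life"] if attempts["count"] >= 3 else []


print(wait_until(tags_loaded, timeout=2.0, interval=0.01))  # ['love', 'life']
```

In a scraper, the condition would wrap the element lookup, so the script continues as soon as the tags are present and gives up cleanly if they never load.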

Submitting Forms and Logging In

Script: scrape_with_login.py

```python
from botright import Botright


def login_and_scrape():
    with Botright() as bot:
        bot.get("https://quotes.toscrape.com/login")

        # Fill in the login form
        bot.type('input#username', 'testuser')
        bot.type('input#password', 'testpass')
        bot.click("input[type='submit']")

        # Verify login by checking for a logout link
        if bot.find_elements_by_css_selector('a[href="/logout"]'):
            print("Logged in successfully!")

            # Now scrape the quotes
            bot.get("https://quotes.toscrape.com/")
            quotes = bot.find_elements_by_css_selector("div.quote")
            for quote in quotes:
                text = quote.find_element_by_css_selector("span.text").text
                author = quote.find_element_by_css_selector("small.author").text
                print(f"\"{text}\" - {author}")
        else:
            print("Login failed.")


if __name__ == "__main__":
    login_and_scrape()
```

Explanation:

- We navigate to the login page and fill in the credentials.
- We verify the login by checking for the presence of a logout link.
- Then we proceed to scrape the content available to logged-in users.

Note: Since quotes.toscrape.com allows any username and password for demonstration, we can use dummy credentials.
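Dummy credentials are fine for the demo site, but real credentials should not be hard-coded in a script. One possible approach, sketched below with the standard library only, is to read them from environment variables. The variable names `SCRAPER_USERNAME` and `SCRAPER_PASSWORD` and the helper `load_credentials` are assumptions made for this example.

```python
import os


def load_credentials(env=None):
    """Read login credentials from environment variables.

    SCRAPER_USERNAME and SCRAPER_PASSWORD are hypothetical variable names.
    Passing a dict as `env` makes the function easy to test.
    """
    env = os.environ if env is None else env
    username = env.get("SCRAPER_USERNAME")
    password = env.get("SCRAPER_PASSWORD")
    if not username or not password:
        raise RuntimeError("Set SCRAPER_USERNAME and SCRAPER_PASSWORD before running.")
    return username, password


# Example with an explicit mapping instead of the real environment.
print(load_credentials({"SCRAPER_USERNAME": "testuser", "SCRAPER_PASSWORD": "testpass"}))
```

The returned pair can then be passed to the form-filling calls in place of the literal strings.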

Integrating CapSolver into Botright

While quotes.toscrape.com does not have CAPTCHAs, many real-world websites do. To prepare for such cases, we'll demonstrate how to integrate CapSolver into our Botright script using the CapSolver browser extension.

Downloading the CapSolver Extension

- Download the Extension:
  - Visit the CapSolver GitHub Releases page.
  - Download the latest version, e.g., `capsolver-chrome-extension-v0.2.3.zip`.
  - Unzip it into a directory at the root of your project, e.g., `./capsolver_extension`.

Configuring the CapSolver Extension

- Locate `config.json`:
  - Path: `capsolver_extension/assets/config.json`

- Edit `config.json`:

```json
{
  "apiKey": "YOUR_CAPSOLVER_API_KEY",
  "enabledForcaptcha": true,
  "captchaMode": "token",
  "enabledForRecaptchaV2": true,
  "reCaptchaV2Mode": "token",
  "solveInvisibleRecaptcha": true,
  "verbose": false
}
```

  - Replace `"YOUR_CAPSOLVER_API_KEY"` with your actual CapSolver API key.
  - Set `enabledForcaptcha` and/or `enabledForRecaptchaV2` to `true` based on the CAPTCHA types you expect.
  - Set the mode to `"token"` for automatic solving.
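Editing `config.json` by hand works, but the same change can be scripted so the API key never has to be pasted into the file manually. The following is a minimal sketch using only the standard library; the helper name `configure_capsolver` is hypothetical, and it only touches keys that appear in the configuration shown above.

```python
import json
from pathlib import Path


def configure_capsolver(config_path, api_key):
    """Write the API key and reCAPTCHA v2 token-mode settings into config.json."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    # Only keys from the configuration shown above are modified;
    # everything else in the file is preserved as-is.
    config.update({
        "apiKey": api_key,
        "enabledForRecaptchaV2": True,
        "reCaptchaV2Mode": "token",
    })
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```

A typical call would be `configure_capsolver("capsolver_extension/assets/config.json", key)`, with `key` read from an environment variable of your choosing rather than stored in the script.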

Loading the CapSolver Extension in Botright

To use the CapSolver extension in Botright, we need to configure the browser to load the extension when it starts.

Note: Botright allows you to customize browser options, including adding extensions.

Modified Script:

```python
from botright import Botright
from selenium.webdriver.chrome.options import Options
import os


def create_bot_with_capsolver():
    # Path to the CapSolver extension folder
    extension_path = os.path.abspath('capsolver_extension')

    # Configure Chrome options
    options = Options()
    options.add_argument(f"--load-extension={extension_path}")
    options.add_argument("--disable-gpu")
    options.add_argument("--no-sandbox")

    # Initialize Botright with custom options
    bot = Botright(options=options)
    return bot
```

Explanation:

- Import `Options`:
  - From `selenium.webdriver.chrome.options`, to set Chrome options.
- Configure Chrome Options:
  - Use `options.add_argument()` to add the CapSolver extension.
- Initialize Botright with Options:
  - Pass the `options` to `Botright` when creating an instance.
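A wrong extension path tends to fail silently: the browser starts, but the extension is simply not loaded. Since an unpacked Chrome extension always has a `manifest.json` at its root, a quick check before building the `--load-extension` argument can catch this early. The helper name `extension_argument` below is hypothetical, and the sketch uses only the standard library.

```python
from pathlib import Path


def extension_argument(extension_dir):
    """Build the --load-extension argument after checking the folder looks valid."""
    path = Path(extension_dir).resolve()
    # An unpacked Chrome extension must contain manifest.json at its root.
    if not (path / "manifest.json").is_file():
        raise FileNotFoundError(f"No manifest.json found in {path}")
    return f"--load-extension={path}"
```

The returned string can be passed to `options.add_argument()` in place of the hand-built f-string.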

Example Script with CapSolver Integration

We'll demonstrate the integration by navigating to a site with a reCAPTCHA, such as Google's reCAPTCHA demo.

Script: scrape_with_capsolver_extension.py

```python
from botright import Botright
from selenium.webdriver.chrome.options import Options
import os
import time


def solve_captcha_and_scrape():
    # Path to the CapSolver extension folder
    extension_path = os.path.abspath('capsolver_extension')

    # Configure Chrome options
    options = Options()
    options.add_argument(f"--load-extension={extension_path}")
    options.add_argument("--disable-gpu")
    options.add_argument("--no-sandbox")

    # Initialize Botright with custom options
    with Botright(options=options) as bot:
        bot.get("https://www.google.com/recaptcha/api2/demo")

        # Wait for the CAPTCHA to be solved by CapSolver
        print("Waiting for CAPTCHA to be solved...")

        # Adjust sleep time based on average solving time
        time.sleep(15)

        # Verify if CAPTCHA is solved by checking the page content
        if "Verification Success" in bot.page_source:
            print("CAPTCHA solved successfully!")
        else:
            print("CAPTCHA not solved yet or failed.")


if __name__ == "__main__":
    solve_captcha_and_scrape()
```

Explanation:

- Set Up Chrome Options:
  - Include the CapSolver extension in the browser session.
- Initialize Botright with Options:
  - Pass the `options` when creating the `Botright` instance.
- Navigate to the Target Site:
  - Use `bot.get()` to navigate to the site with a reCAPTCHA.
- Wait for CAPTCHA to be Solved:
  - The CapSolver extension will automatically solve the CAPTCHA.
  - Use `time.sleep()` to wait; adjust the time as needed.
- Verify CAPTCHA Solution:
  - Check the page content to confirm if the CAPTCHA was solved.

Important Notes:

- Extension Path:
  - Ensure `extension_path` correctly points to your CapSolver extension folder.
- Wait Time:
  - The solving time may vary; adjust `time.sleep()` accordingly.
- Driver Management:
  - Botright manages the WebDriver internally; passing `options` customizes the driver.
- Compliance:
  - Ensure that you comply with the terms of service of the website you are scraping.

Running the Script:

bash

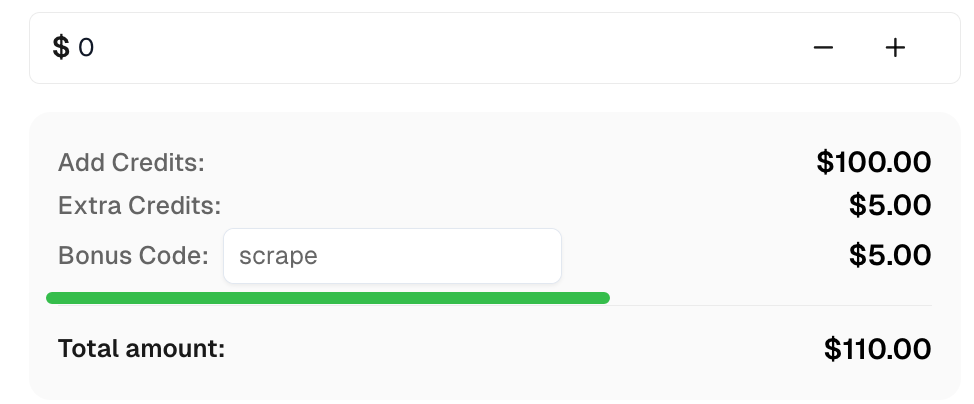

python scrape_with_capsolver_extension.pyBonus Code

Claim your Bonus Code for top captcha solutions at CapSolver: scrape. After redeeming it, you will get an extra 5% bonus after each recharge, unlimited times.

Conclusion

By integrating CapSolver with Botright using the CapSolver browser extension, you can automate CAPTCHA solving in your web scraping projects. This helps keep data extraction running, even on sites protected by CAPTCHAs.

Key Takeaways:

- Botright simplifies web automation with human-like interactions.

- The CapSolver browser extension can be integrated into Botright scripts.

- Proper configuration of the extension and browser options is crucial.

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
