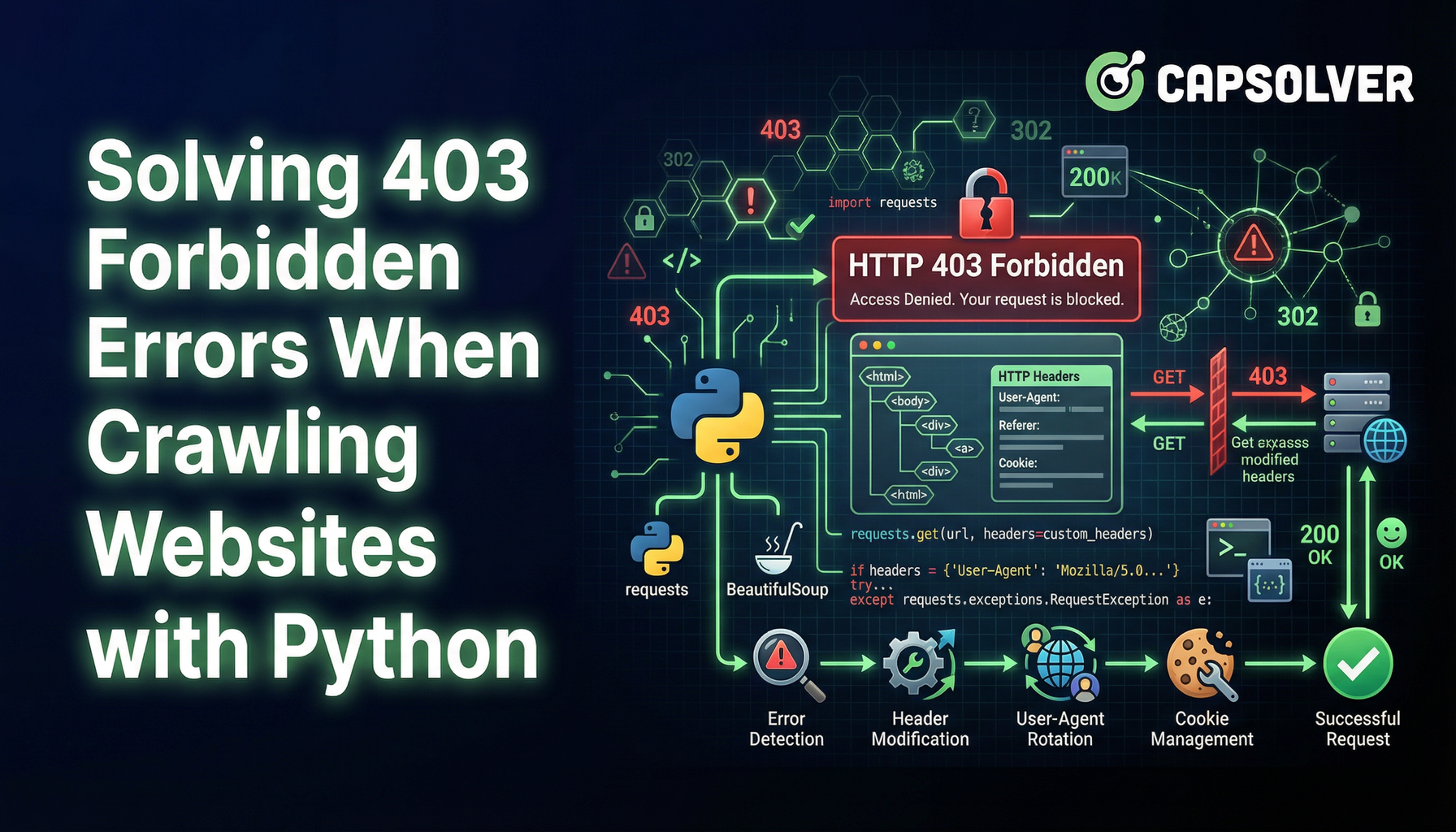

Solving 403 Forbidden Errors When Crawling Websites with Python

Sora Fujimoto

AI Solutions Architect

01-Aug-2024

Web scraping is an invaluable technique for extracting data from websites. However, encountering a 403 Forbidden error can be a major roadblock. This error signifies that the server understands your request, but it refuses to authorize it. Here’s how to navigate around this issue and continue your web scraping journey.

Understanding the 403 Forbidden Error

A 403 Forbidden error occurs when a server denies access to the requested resource. This can happen for several reasons, including:

- IP Blocking: Servers may block IP addresses if they detect unusual or high-volume requests.

- User-Agent Restrictions: Some websites restrict access based on the User-Agent string, which identifies the browser and device making the request.

- Authentication Required: Accessing certain pages may require login credentials or an API key.
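A quick way to narrow down which of these causes applies is to look at the status code and response headers. The sketch below is a rough heuristic rather than a definitive diagnosis: `explain_403` is a hypothetical helper, and the header checks are assumptions about how some servers behave (many servers send a bare 403 with no hints at all).

```python
def explain_403(status_code, headers):
    """Return a rough guess at why a request was refused.

    `headers` is any mapping of response header names to values, for
    example `response.headers` from the requests library.
    """
    if status_code != 403:
        return "not a 403 response"

    # Normalise header names so the lookups below are case-insensitive.
    lowered = {name.lower(): value for name, value in headers.items()}

    # Assumption: a WWW-Authenticate header hints that credentials would help.
    if "www-authenticate" in lowered:
        return "authentication may be required"
    # Assumption: a Retry-After header hints at a temporary, rate-based block.
    if "retry-after" in lowered:
        return "possible rate limiting or temporary IP block"
    return "possible IP or User-Agent restriction"
```

With requests, this could be called as `explain_403(response.status_code, response.headers)` after any request that fails.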

Techniques to Bypass 403 Errors

1. Rotate IP Addresses

Servers often block requests from the same IP address after a certain threshold. Using a pool of proxy servers to rotate IP addresses can help you avoid this. Services like Nstproxy or Bright Data offer rotating proxy solutions.
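The rotation itself can be sketched as cycling through a pool and moving to the next proxy whenever a request is refused. This is a minimal sketch under stated assumptions: the proxy URLs are placeholders, `proxy_configs` and `fetch_with_rotation` are hypothetical helper names, and `get` is whatever HTTP function you pass in (for example `requests.get`).

```python
from itertools import cycle

# Placeholder proxy URLs; replace with addresses from your provider.
PROXY_POOL = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def proxy_configs(pool):
    """Yield requests-style proxy dicts, cycling through the pool forever."""
    for proxy_url in cycle(pool):
        yield {"http": proxy_url, "https": proxy_url}


def fetch_with_rotation(url, get, configs, max_attempts=3):
    """Try `url` through successive proxies until one is not refused with 403.

    `get` is the HTTP function to call, e.g. `requests.get`.
    Returns the last response received.
    """
    response = None
    for _ in range(max_attempts):
        response = get(url, proxies=next(configs), timeout=10)
        if response.status_code != 403:
            break
    return response
```

With requests installed, a call might look like `fetch_with_rotation("http://example.com", requests.get, proxy_configs(PROXY_POOL))`.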

python

import requests

# Route both HTTP and HTTPS traffic through the same proxy.
proxies = {
    "http": "http://your_proxy_here",
    "https": "http://your_proxy_here",
}

response = requests.get("http://example.com", proxies=proxies)

2. Use a Realistic User-Agent

Web servers can detect and block requests with suspicious User-Agent strings. Spoofing a User-Agent string to mimic a regular browser can help avoid detection.
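A User-Agent on its own can still look unusual if the other headers a browser normally sends are missing. The sketch below picks a User-Agent at random and pairs it with typical `Accept` headers. The strings are illustrative examples that will go stale over time, and `browser_like_headers` is a hypothetical helper name.

```python
import random

# Example desktop User-Agent strings; illustrative and likely to go stale.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def browser_like_headers(rng=random):
    """Build a header dict that resembles what a desktop browser sends."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
    }
```

The result can be passed directly as the `headers` argument of `requests.get`.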

python

import requests

# Present the request as coming from a desktop Chrome browser.
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36"
}

response = requests.get("http://example.com", headers=headers)

3. Implement Request Throttling

Sending too many requests in a short period can trigger rate limiting. Introducing delays between requests can help you stay under the radar.
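A fixed delay is the simplest form of throttling. A common refinement is to back off exponentially, with some random jitter, when the server starts refusing requests. The following is a sketch under assumptions: `backoff_delay` and `polite_get` are hypothetical helpers, the default values are arbitrary, and `get` stands for an HTTP function such as `requests.get`.

```python
import random
import time


def backoff_delay(attempt, base=1.0, cap=60.0, jitter=0.5):
    """Seconds to wait after failed attempt number `attempt` (0-based).

    The delay doubles with each attempt, is capped at `cap`, and has up to
    `jitter` seconds of random noise added so requests are not evenly spaced.
    """
    delay = min(cap, base * (2 ** attempt))
    return delay + random.uniform(0, jitter)


def polite_get(url, get, max_attempts=4, sleep=time.sleep):
    """Fetch `url`, backing off and retrying on 403 or 429 responses.

    `get` is the HTTP function to call, e.g. `requests.get`.
    Returns the last response received.
    """
    response = None
    for attempt in range(max_attempts):
        response = get(url, timeout=10)
        if response.status_code not in (403, 429):
            return response
        # Wait before the next try, but not after the final attempt.
        if attempt < max_attempts - 1:
            sleep(backoff_delay(attempt))
    return response
```

The `sleep` parameter exists only so the waiting can be replaced in tests; in normal use the default `time.sleep` is enough.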

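python

import time

import requests

url_list = ["http://example.com/page1", "http://example.com/page2"]  # Placeholder URLs

for url in url_list:
    response = requests.get(url)
    time.sleep(5)  # Delay for 5 seconds

4. Handle Authentication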

Some resources require authentication. Sending a valid session cookie or API key with each request lets the server recognize you as an authorized client.
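When a site uses a login form, one option is to log in once and reuse the same session so that its cookies are sent automatically. The sketch below assumes a form-based login and takes the session as a parameter (expected to behave like `requests.Session()`). The function names and the Bearer scheme are assumptions; check what the target site or API actually expects.

```python
def login_and_fetch(session, login_url, credentials, target_url):
    """Log in once, then fetch a protected page with the same session.

    `session` is expected to behave like `requests.Session()`: cookies set by
    the login response are sent automatically on later requests.
    """
    login_response = session.post(login_url, data=credentials, timeout=10)
    login_response.raise_for_status()  # Raises on 4xx/5xx login responses.
    return session.get(target_url, timeout=10)


def api_key_headers(api_key):
    """Headers for a token-based API.

    The Bearer scheme is an assumption; some APIs expect a custom header.
    """
    return {"Authorization": f"Bearer {api_key}"}
```

A call might look like `login_and_fetch(requests.Session(), "http://example.com/login", {"username": "...", "password": "..."}, "http://example.com/data")`, where both URLs and the form field names are placeholders.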

python

import requests

# Reuse a session cookie copied from an authenticated browser session.
cookies = {
    "session": "your_session_cookie_here"
}

response = requests.get("http://example.com", cookies=cookies)

5. Leverage Headless Browsers

Browser automation tools like Puppeteer or Selenium can drive a real browser in headless mode. Because the page is loaded and its JavaScript executed as in a normal browser, this can help bypass sophisticated anti-scraping measures.

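python

from selenium import webdriver

options = webdriver.ChromeOptions()
options.add_argument("--headless")  # Run Chrome without a visible window

driver = webdriver.Chrome(options=options)
try:
    driver.get("http://example.com")
    html = driver.page_source  # HTML after the page has loaded
finally:
    driver.quit()  # Always release the browser process

6. Overcome CAPTCHA Challenges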

In addition to 403 errors, web scrapers often encounter CAPTCHAs, which are designed to block automated access. CAPTCHAs like reCAPTCHA require solving visual or interactive challenges to prove that the request is made by a human.

To solve these challenges, you can use services like CapSolver that provide automated CAPTCHA-solving solutions. CapSolver supports a variety of CAPTCHA types and offers easy integration with web scraping tools.
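Before handing a page to a solving service, it helps to detect that a CAPTCHA was served at all. The sketch below is a simple heuristic that looks for markup commonly used to embed CAPTCHA widgets. The marker list is an assumption and far from exhaustive, and `looks_like_captcha` is a hypothetical helper name.

```python
# Substrings that commonly appear in pages embedding a CAPTCHA widget.
# Assumption: this short list covers only a few popular widgets.
CAPTCHA_MARKERS = ("g-recaptcha", "h-captcha", "cf-turnstile")


def looks_like_captcha(html):
    """Return True if the HTML appears to contain a CAPTCHA widget."""
    lowered = html.lower()
    return any(marker in lowered for marker in CAPTCHA_MARKERS)
```

A scraper could call `looks_like_captcha(response.text)` and, if it returns `True`, pause, switch strategy, or pass the challenge to a solver.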

Conclusion

Encountering a 403 Forbidden error while web scraping can be frustrating, but understanding the reasons behind it and employing the right techniques can help you overcome this hurdle. Whether it's rotating IPs, using realistic User-Agent strings, throttling requests, handling authentication, or leveraging headless browsers, these methods can help you continue extracting valuable data from the web.

By following these strategies, you can effectively manage and circumvent 403 errors, ensuring smoother and more successful web scraping efforts.

FAQs

1. Is bypassing a 403 Forbidden error legal in web scraping?

Bypassing a 403 error is not inherently illegal, but legality depends on the website’s terms of service, robots.txt rules, and local laws. Scraping publicly accessible data for legitimate purposes is often allowed, while bypassing authentication, paywalls, or explicit restrictions may violate terms or regulations. Always review the target site’s policies before scraping.

2. Why do I still get a 403 error even when using proxies and a User-Agent?

A 403 error can persist if the website uses advanced bot-detection systems such as behavioral analysis, fingerprinting, or CAPTCHA challenges. In such cases, simple IP rotation and User-Agent spoofing may not be sufficient. Combining request throttling, session management, headless browsers, and CAPTCHA-solving services like CapSolver can significantly improve success rates.

3. What is the most reliable method to avoid 403 errors for large-scale scraping?

For large-scale scraping, the most reliable approach is a layered strategy: high-quality rotating residential or mobile proxies, realistic browser fingerprints, controlled request rates, proper authentication handling, and automated CAPTCHA solving. Using headless browsers with AI-based web unblock solutions helps closely mimic real user behavior and reduces the likelihood of repeated 403 blocks.

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
