How to Use node-fetch for Web Scraping

Lucas Mitchell

Automation Engineer

27-Sep-2024

What is node-fetch?

node-fetch is a lightweight JavaScript library that brings the window.fetch API to Node.js. It is often used for making HTTP requests from a Node.js environment, providing a modern and flexible way to handle network operations asynchronously.
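A fetch() promise rejects only on network failures. An HTTP error status such as 404 or 500 still resolves normally, so it is worth checking the status yourself. The following is a minimal async/await sketch of a JSON helper that does this. The name getJson is hypothetical. The fetch function is passed in as a parameter (an assumption made here) so the helper works with node-fetch or any other fetch-compatible function:

```javascript
// Hypothetical helper: fetch a URL and parse its JSON body,
// treating any non-2xx status as an error.
// fetchFn is any fetch-compatible function, e.g. require('node-fetch') (v2).
async function getJson(fetchFn, url, options = {}) {
  const response = await fetchFn(url, options);
  // fetch resolves for 4xx/5xx responses, so check the status explicitly
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} for ${url}`);
  }
  return response.json();
}
```

With node-fetch v2 installed, a call such as getJson(require('node-fetch'), 'https://httpbin.org/get') resolves to the parsed body. It rejects on either a network failure or a non-2xx status.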

Features:

- Promise-based: Uses JavaScript promises to manage asynchronous operations in a simple manner.

- Node.js Support: Specifically designed for Node.js environments.

- Stream Support: Response bodies are exposed as Node.js readable streams, so large payloads can be processed incrementally instead of being buffered in memory.

- Small and Efficient: Minimalistic design, focusing on performance and compatibility with modern JavaScript features.
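Stream support is what lets you write a large download straight to disk. The sketch below relies on node-fetch's response.body being a Node.js Readable. The helper name downloadToFile is hypothetical, and the fetch function is injected as a parameter (an assumption for this sketch):

```javascript
const fs = require('fs');
const { pipeline } = require('stream');
const { promisify } = require('util');

const pipelineAsync = promisify(pipeline);

// Hypothetical helper: stream a response body to a file without buffering it all.
// fetchFn is assumed to behave like node-fetch, whose response.body is a Readable.
async function downloadToFile(fetchFn, url, filePath) {
  const response = await fetchFn(url);
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} for ${url}`);
  }
  // pipeline() forwards errors from either stream and closes both when done
  await pipelineAsync(response.body, fs.createWriteStream(filePath));
}
```

For example, downloadToFile(require('node-fetch'), 'https://httpbin.org/bytes/1024', 'bytes.bin') would save 1024 random bytes to bytes.bin.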

Prerequisites

Before using node-fetch, ensure you have:

- Node.js installed

- npm or yarn for installing packages

Installation

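To use node-fetch, install it with npm or yarn. The examples in this guide load it with CommonJS require(), which works with node-fetch v2. Version 3 is published as an ESM-only package and must be loaded with import instead, so install the v2 line:

bash

npm install node-fetch@2

or

bash

yarn add node-fetch@2

Basic Example: Making a GET Request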

Here’s how to perform a simple GET request using node-fetch:

javascript

const fetch = require('node-fetch');

fetch('https://httpbin.org/get')
  .then(response => response.json())
  .then(data => {
    console.log('Response Body:', data);
  })
  .catch(error => {
    console.error('Error:', error);
  });

Web Scraping Example: Fetching JSON Data from an API

Let’s fetch data from an API and log the results:

javascript

const fetch = require('node-fetch');

fetch('https://jsonplaceholder.typicode.com/posts')
  .then(response => response.json())
  .then(posts => {
    posts.forEach(post => {
      console.log(`${post.title} - ${post.body}`);
    });
  })
  .catch(error => {
    console.error('Error:', error);
  });

Handling Captchas with CapSolver and node-fetch

In this section, we’ll integrate CapSolver with node-fetch to handle captchas. CapSolver provides an HTTP API for solving captchas such as ReCaptcha V3. You submit a task with createTask, poll getTaskResult until the solution is ready, and then send the returned token along with your own request.
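Because CapSolver works asynchronously, your code has to keep asking whether a task has finished. Polling without an upper limit can hang if a task never completes, so a bounded loop is a reasonable safeguard. The sketch below is generic. The name pollUntilReady, its options, and the defaults (24 attempts, 5 seconds apart, roughly two minutes in total) are assumptions. The check function stands in for a single getTaskResult call:

```javascript
// Hypothetical generic helper: call checkFn until it reports a finished result,
// waiting intervalMs between attempts and giving up after maxAttempts.
// checkFn must resolve to { done: false } or { done: true, value }.
async function pollUntilReady(checkFn, { intervalMs = 5000, maxAttempts = 24 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = await checkFn();
    if (result.done) {
      return result.value;
    }
    // Only sleep if another attempt will follow
    if (attempt < maxAttempts) {
      await new Promise(resolve => setTimeout(resolve, intervalMs));
    }
  }
  throw new Error(`Task not ready after ${maxAttempts} attempts`);
}
```

With CapSolver, checkFn would POST { clientKey, taskId } to https://api.capsolver.com/getTaskResult and return { done: result.status === 'ready', value: result.solution }.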

Example: Solving ReCaptcha V3 with CapSolver and node-fetch

First, install node-fetch. The example below calls the CapSolver REST API directly with node-fetch, so no separate CapSolver package is required:

bash

npm install node-fetch@2

Now, here’s how to solve a ReCaptcha V3 and use the solution in your request:

javascript

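const fetch = require('node-fetch');

const CAPSOLVER_KEY = 'YourKey'; // replace with your CapSolver API key
const PAGE_URL = 'https://antcpt.com/score_detector'; // page that shows the captcha
const PAGE_KEY = '6LcR_okUAAAAAPYrPe-HK_0RULO1aZM15ENyM-Mf'; // the site's reCAPTCHA site key
const PAGE_ACTION = 'homepage'; // action name the site passes to reCAPTCHA v3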

async function createTask(url, key, pageAction) {
  try {
    const apiUrl = 'https://api.capsolver.com/createTask';
    const payload = {
      clientKey: CAPSOLVER_KEY,
      task: {
        type: 'ReCaptchaV3TaskProxyLess', // solved without supplying your own proxy
        websiteURL: url,
        websiteKey: key,
        pageAction: pageAction
      }
    };
    const response = await fetch(apiUrl, {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(payload)
    });
    const data = await response.json();
    // A non-zero errorId means the task was rejected (e.g. invalid key or parameters)
    if (data.errorId) {
      throw new Error(`createTask failed: ${data.errorDescription}`);
    }
    return data.taskId;
  } catch (error) {
    console.error('Error creating CAPTCHA task:', error);
    throw error;
  }
}

async function getTaskResult(taskId) {
  try {
    const apiUrl = 'https://api.capsolver.com/getTaskResult';
    const payload = {
      clientKey: CAPSOLVER_KEY,
      taskId: taskId,
    };
    let result;
    do {
      const response = await fetch(apiUrl, {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' },
        body: JSON.stringify(payload)
      });
      result = await response.json();
      // Stop polling on an API error or a failed task instead of looping forever
      if (result.errorId || result.status === 'failed') {
        throw new Error(`getTaskResult failed: ${result.errorDescription}`);
      }
      if (result.status === 'ready') {
        return result.solution;
      }
      await new Promise(resolve => setTimeout(resolve, 5000)); // wait 5 seconds
    } while (true);
  } catch (error) {
    console.error('Error fetching CAPTCHA result:', error);
    throw error;
  }
}

async function main() {
  console.log('Creating CAPTCHA task...');
  const taskId = await createTask(PAGE_URL, PAGE_KEY, PAGE_ACTION);
  console.log(`Task ID: ${taskId}`);

  console.log('Retrieving CAPTCHA result...');
  const solution = await getTaskResult(taskId);
  const token = solution.gRecaptchaResponse;
  console.log(`Token Solution: ${token}`);

  const res = await fetch('https://antcpt.com/score_detector/verify.php', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ 'g-recaptcha-response': token })
  });
  const response = await res.json();
  console.log(`Score: ${response.score}`);
}

main().catch(err => {
  console.error(err);
});

Handling Proxies with node-fetch

node-fetch has no built-in proxy setting. To route your requests through a proxy, pass a proxy agent such as https-proxy-agent in the agent option. Here's how to implement it:
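Hard-coding proxy credentials in source code makes them easy to leak. One alternative is to read the proxy URL from the conventional HTTPS_PROXY environment variable and attach an agent only when a proxy is configured. The sketch below does this. The helper name proxyOptions is hypothetical, and the agent class is passed in (an assumption) so the helper does not depend on a particular https-proxy-agent version:

```javascript
// Hypothetical helper: build fetch options that use a proxy agent only when
// HTTPS_PROXY (or https_proxy) is set in the given environment object.
function proxyOptions(env, ProxyAgentClass) {
  const proxyUrl = env.HTTPS_PROXY || env.https_proxy;
  if (!proxyUrl) {
    return {}; // no proxy configured: connect directly
  }
  return { agent: new ProxyAgentClass(proxyUrl) };
}

// Usage (assumes node-fetch v2 and a recent https-proxy-agent are installed):
// const fetch = require('node-fetch');
// const { HttpsProxyAgent } = require('https-proxy-agent');
// fetch('https://httpbin.org/ip', proxyOptions(process.env, HttpsProxyAgent));
```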

bash

npm install https-proxy-agent

Example with a proxy:

javascript

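const fetch = require('node-fetch');
// Recent versions of https-proxy-agent export the class as a named export
const { HttpsProxyAgent } = require('https-proxy-agent');

// Replace with your proxy's credentials, host, and port
const proxyAgent = new HttpsProxyAgent('http://username:password@proxyserver:8080');

fetch('https://httpbin.org/ip', { agent: proxyAgent })
  .then(response => response.json())
  .then(data => {
    // httpbin echoes the origin IP it saw, which should be the proxy's address
    console.log('Response Body:', data);
  })
  .catch(error => {
    console.error('Error:', error);
  });

Handling Cookies with node-fetch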

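node-fetch does not store cookies between requests. For cookie handling, you can wrap it with a library like fetch-cookie, which keeps a cookie jar and sends the stored cookies on later requests. Here's how to use it: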
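To illustrate what a cookie wrapper automates, here is a deliberately minimal sketch that turns Set-Cookie header values into a Cookie header for the next request. The function name cookieHeaderFrom is hypothetical. It ignores attributes such as Domain, Path, and Expires, which a real cookie jar must respect:

```javascript
// Hypothetical helper: build a Cookie request header from Set-Cookie values.
// Only the leading name=value pair of each cookie is kept; attributes are dropped.
function cookieHeaderFrom(setCookieValues) {
  const jar = new Map();
  for (const value of setCookieValues) {
    const pair = value.split(';')[0].trim();
    const eq = pair.indexOf('=');
    if (eq > 0) {
      // A later cookie with the same name overwrites the earlier value
      jar.set(pair.slice(0, eq), pair.slice(eq + 1));
    }
  }
  return [...jar].map(([name, val]) => `${name}=${val}`).join('; ');
}
```

With node-fetch v2, response.headers.raw()['set-cookie'] returns the individual Set-Cookie values as an array (or undefined if there are none, so fall back to an empty array). The result can be sent as a Cookie header on the next request.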

bash

npm install fetch-cookie

Example:

javascript

const fetch = require('node-fetch');
const fetchCookie = require('fetch-cookie');

const cookieFetch = fetchCookie(fetch);

cookieFetch('https://httpbin.org/cookies/set?name=value')
  .then(response => response.json())
  .then(data => {
    console.log('Cookies:', data);
  })
  .catch(error => {
    console.error('Error:', error);
  });

Advanced Usage: Custom Headers and POST Requests

You can customize headers and perform POST requests with node-fetch:
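Besides JSON bodies, many HTML forms expect application/x-www-form-urlencoded data. node-fetch accepts a URLSearchParams instance as the body and sets that Content-Type automatically. Here is a sketch with a hypothetical formPostOptions helper:

```javascript
// Hypothetical helper: build fetch options for a form-encoded POST request.
// Passing URLSearchParams as the body lets node-fetch set
// Content-Type: application/x-www-form-urlencoded;charset=UTF-8 itself.
function formPostOptions(fields, extraHeaders = {}) {
  return {
    method: 'POST',
    headers: { ...extraHeaders },
    body: new URLSearchParams(fields),
  };
}

// Usage (assumes node-fetch v2 is installed); httpbin echoes the fields under "form":
// const fetch = require('node-fetch');
// fetch('https://httpbin.org/post', formPostOptions(
//   { username: 'testuser', password: 'testpass' },
//   { 'User-Agent': 'Mozilla/5.0 (compatible)' }
// )).then(res => res.json()).then(json => console.log(json.form));
```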

javascript

const fetch = require('node-fetch');

const headers = {

'User-Agent': 'Mozilla/5.0 (compatible)',

'Accept-Language': 'en-US,en;q=0.5',

};

const data = {

username: 'testuser',

password: 'testpass',

};

fetch('https://httpbin.org/post', {

method: 'POST',

headers: headers,

body: JSON.stringify(data),

})

.then(response => response.json())

.then(data => {

console.log('Response JSON:', data);

})

.catch(error => {

console.error('Error:', error);

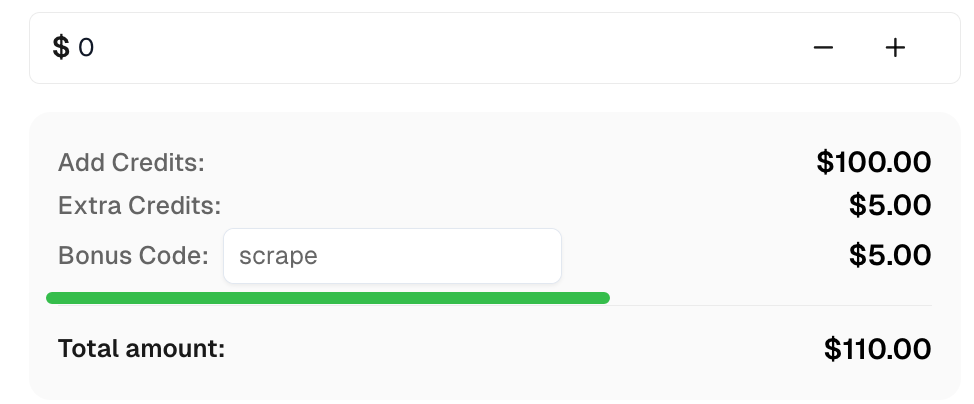

});Bonus Code

Claim your Bonus Code for top captcha solutions at CapSolver: scrape. After redeeming it, you will get an extra 5% bonus after each recharge, unlimited times.

Conclusion

With node-fetch, you can effectively manage HTTP requests in Node.js. By integrating it with CapSolver, you can solve captchas such as ReCaptcha V3 and access content protected by them. node-fetch also supports custom headers, and with helper libraries such as https-proxy-agent and fetch-cookie it handles proxies and cookies, making it a versatile tool for web scraping and automation.

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
