Changing User Agents for Web Scraping with Go Colly

Lucas Mitchell

Automation Engineer

27-Sep-2024

When you're working on web scraping projects, changing the User-Agent string is one of the most effective ways to prevent your scraper from getting blocked or flagged as a bot. Web servers often use the User-Agent string to identify the type of client (e.g., browser, bot, or scraper) accessing their resources. If your scraper sends the same User-Agent on each request, you run the risk of being detected and potentially blocked. In this article, we'll explore how to change the User-Agent in Go Colly, a popular web scraping framework in Go, to make your scraping efforts more effective and resilient.

What Is Colly?

Colly is a fast and elegant scraping framework for Gophers. It provides a clean interface for writing any kind of crawler, scraper, or spider. With Colly you can easily extract structured data from websites, which can be used for a wide range of applications such as data mining, data processing, or archiving.


What Is Colly User Agent?

A User Agent is a special string sent in the User-Agent request header that allows servers to identify the client's operating system and version, browser type and version, and other details.

For normal browsers, the User Agent strings look like this:

- Google Chrome version 128 on Windows: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/128.0.0.0 Safari/537.36

- Firefox version 130 on Windows: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:130.0) Gecko/20100101 Firefox/130.0

However, in Colly (a web scraping framework), the default User Agent is:

colly - https://github.com/gocolly/colly

In the context of data scraping, one of the most common anti-scraping measures is to determine whether the request is coming from a normal browser by examining the User Agent. This helps in identifying bots.

Colly's default User Agent effectively tells the target website, "I am a bot." This makes it easy for websites to detect and block Colly scrapers that run with default settings.
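To make the check described above concrete, here is a minimal sketch of how a server might flag requests by User Agent. It uses only the Go standard library and a local test server. The `botMarkers` list and the `looksLikeBot`, `handler`, and `statusFor` names are hypothetical, chosen for illustration; real anti-bot systems are far more elaborate than a substring match.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// botMarkers is a hypothetical, deliberately tiny deny-list for illustration.
var botMarkers = []string{"colly", "python-requests", "curl", "go-http-client"}

// looksLikeBot reports whether ua is empty or contains a known tool marker.
func looksLikeBot(ua string) bool {
	ua = strings.ToLower(strings.TrimSpace(ua))
	if ua == "" {
		return true
	}
	for _, marker := range botMarkers {
		if strings.Contains(ua, marker) {
			return true
		}
	}
	return false
}

// handler rejects requests whose User-Agent header looks automated.
func handler(w http.ResponseWriter, r *http.Request) {
	if looksLikeBot(r.UserAgent()) {
		http.Error(w, "blocked", http.StatusForbidden)
		return
	}
	fmt.Fprint(w, "ok")
}

// statusFor sends one GET request with the given User-Agent
// and returns the HTTP status code of the response.
func statusFor(url, ua string) (int, error) {
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return 0, err
	}
	req.Header.Set("User-Agent", ua)
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	return resp.StatusCode, nil
}

func main() {
	// Start a throwaway local server so no external site is contacted.
	srv := httptest.NewServer(http.HandlerFunc(handler))
	defer srv.Close()

	agents := []string{
		"colly - https://github.com/gocolly/colly",
		"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:130.0) Gecko/20100101 Firefox/130.0",
	}
	for _, ua := range agents {
		code, err := statusFor(srv.URL, ua)
		if err != nil {
			fmt.Println("request failed:", err)
			continue
		}
		fmt.Println(code, ua)
	}
}
```

Run against this toy server, the Colly default string is rejected with 403 while the Firefox string gets 200, which is the behavior the rest of this article tries to avoid triggering.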

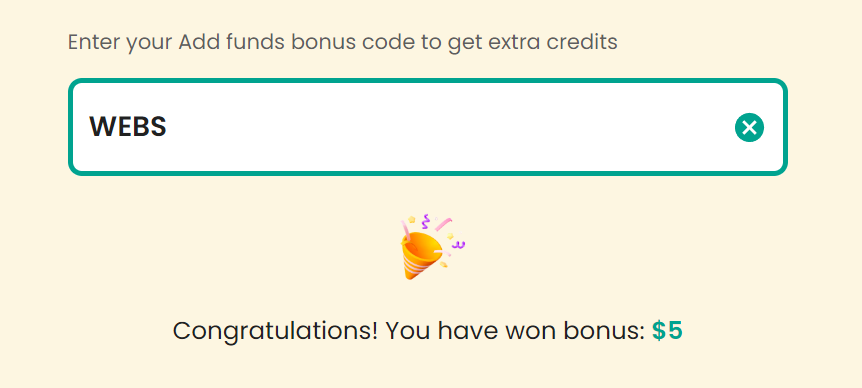


Why Change the User-Agent?

Before diving into the code, let's take a quick look at why changing the User-Agent is crucial:

- Avoid Detection: Many websites use anti-bot mechanisms that analyze incoming User-Agent strings to detect suspicious or repetitive patterns. If your scraper sends the same User-Agent in every request, it becomes an easy target for detection.

- Mimic Real Browsers: By changing the User-Agent string, your scraper can mimic real browsers such as Chrome, Firefox, or Safari, making it less likely to be flagged as a bot.

- Enhance User Experience and Solve CAPTCHA: Many websites use CAPTCHA challenges to verify that a user is not a bot, ensuring a more secure browsing experience. However, for automation tasks, these challenges can interrupt the workflow. If your scraper encounters such CAPTCHA challenges, you can integrate tools like CapSolver to automatically solve them, allowing your automation to continue smoothly without interruptions.
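The first point in the list, not sending the same User-Agent on every request, can be sketched independently of any scraping library. The `pickUserAgent` helper and the two-entry pool below are illustrative assumptions, not part of Colly. The comment in `main` shows one way the helper could be wired into a Colly collector through an `OnRequest` callback.

```go
package main

import (
	"fmt"
	"math/rand"
)

// userAgents is an illustrative pool; a real pool should be kept current.
var userAgents = []string{
	"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:130.0) Gecko/20100101 Firefox/130.0",
	"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/128.0.0.0 Safari/537.36",
}

// pickUserAgent returns a random entry from pool, or "" if pool is empty.
func pickUserAgent(pool []string) string {
	if len(pool) == 0 {
		return ""
	}
	return pool[rand.Intn(len(pool))]
}

func main() {
	// Simulate five outgoing requests, choosing a User Agent for each one.
	for i := 1; i <= 5; i++ {
		fmt.Printf("request %d -> %s\n", i, pickUserAgent(userAgents))
	}

	// With Colly, the same helper could be called once per request:
	//
	//   c.OnRequest(func(r *colly.Request) {
	//       r.Headers.Set("User-Agent", pickUserAgent(userAgents))
	//   })
}
```

Choosing the value per request, rather than once at startup, is what keeps a long-running scraper from presenting a single fixed User Agent.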

How to Set Up Custom User Agent in Colly


Handling User Agents in Colly

We can check the value of our User Agent by visiting https://httpbin.org/user-agent. Colly primarily provides three methods to handle requests:

- Visit: Access the target website

- OnResponse: Process the response content

- OnError: Handle request errors

Here's a complete code example to access httpbin and print the User Agent:

go

package main

import (
	"log"

	"github.com/gocolly/colly"
)

func main() {
	// Create a new collector
	c := colly.NewCollector()

	// Register a callback that prints the response body
	c.OnResponse(func(r *colly.Response) {
		log.Println(string(r.Body))
	})

	// Handle request errors
	c.OnError(func(r *colly.Response, err error) {
		log.Println("Request failed, err:", err)
	})

	// Start scraping
	err := c.Visit("https://httpbin.org/user-agent")
	if err != nil {
		log.Fatal(err)
	}
}

This will output:

json

{
  "user-agent": "colly - https://github.com/gocolly/colly"
}

Customizing User Agents

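Colly provides the colly.UserAgent option to customize the User Agent. To avoid sending one fixed value, you can define a list of User Agents and select from it at random. Note that colly.UserAgent is applied when the collector is created, so the example below picks one User Agent per run; to vary it per request, set the User-Agent header inside an OnRequest callback instead. Here's an example: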

go

package main

import (
	"log"
	"math/rand"

	"github.com/gocolly/colly"
)

// A small pool of real browser User Agent strings
var userAgents = []string{
	"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:130.0) Gecko/20100101 Firefox/130.0",
	"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/128.0.0.0 Safari/537.36",
	"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/128.0.0.0 Safari/537.36 Edg/128.0.0.0",
	"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.0.0 Safari/537.36 OPR/113.0.0.0",
}

func main() {
	// Create a new collector with a randomly chosen User Agent.
	// Before Go 1.20, math/rand must be seeded explicitly to get
	// a different choice on each run.
	c := colly.NewCollector(
		colly.UserAgent(userAgents[rand.Intn(len(userAgents))]),
	)

	// Register a callback that prints the response body
	c.OnResponse(func(r *colly.Response) {
		log.Println(string(r.Body))
	})

	// Handle request errors
	c.OnError(func(r *colly.Response, err error) {
		log.Println("Request failed, err:", err)
	})

	// Start scraping
	err := c.Visit("https://httpbin.org/user-agent")
	if err != nil {
		log.Fatal(err)
	}
}

Using fake-useragent Library

Instead of maintaining a custom User Agent list, we can use the fake-useragent library to generate random User Agents. Here's an example:

go

package main

import (
	"log"

	browser "github.com/EDDYCJY/fake-useragent"
	"github.com/gocolly/colly"
)

func main() {
	// Create a new collector with a generated random User Agent
	c := colly.NewCollector(
		colly.UserAgent(browser.Random()),
	)

	// Register a callback that prints the response body
	c.OnResponse(func(r *colly.Response) {
		log.Println(string(r.Body))
	})

	// Handle request errors
	c.OnError(func(r *colly.Response, err error) {
		log.Println("Request failed, err:", err)
	})

	// Start scraping
	err := c.Visit("https://httpbin.org/user-agent")
	if err != nil {
		log.Fatal(err)
	}
}

Integrating CapSolver

While randomizing User Agents in Colly can help avoid being identified as a bot to some extent, it may not be sufficient when facing more sophisticated anti-bot challenges. Examples of such challenges include reCAPTCHA, Cloudflare Turnstile, and others. These systems check the validity of your request headers, verify your browser fingerprint, assess the risk of your IP, and may require complex JS encryption parameters or difficult image recognition tasks.

These challenges can significantly hinder your data scraping efforts. However, there's no need to worry - all of the aforementioned bot challenges can be handled by CapSolver. CapSolver uses AI-based Auto Web Unblock technology to automatically solve CAPTCHAs. All complex tasks can be successfully resolved within seconds.

The official website provides SDKs in multiple languages, making it easy to integrate into your project. You can refer to the CapSolver documentation for more information on how to implement this solution in your scraping projects.


Conclusion

Changing the User-Agent in Go Colly is a crucial technique for effective and resilient web scraping. By implementing custom User-Agents, you can significantly reduce the risk of your scraper being detected and blocked by target websites. Here's a summary of the key points we've covered:

- We've learned why changing the User-Agent is important for web scraping projects.

- We've explored different methods to set custom User-Agents in Colly, including:

  - Using a predefined list of User-Agents

  - Implementing random selection from this list

  - Utilizing the fake-useragent library for more diverse options

- We've discussed how these techniques can help mimic real browser behavior and avoid detection.

- For more advanced anti-bot challenges, we've introduced the concept of using specialized tools like CapSolver to handle CAPTCHAs and other complex verification systems.

Remember, while changing User-Agents is an effective strategy, it's just one part of responsible and efficient web scraping. Always respect websites' terms of service and robots.txt files, implement rate limiting, and consider the ethical implications of your scraping activities.

By combining these techniques with other best practices in web scraping, you can create more robust and reliable scrapers using Go Colly. As web technologies continue to evolve, staying updated with the latest scraping techniques and tools will be crucial for maintaining the effectiveness of your web scraping projects.

Note on Compliance

Important: When engaging in web scraping, it's crucial to adhere to legal and ethical guidelines. Always ensure that you have permission to scrape the target website, and respect the site's robots.txt file and terms of service. CapSolver firmly opposes the misuse of our services for any non-compliant activities. Misuse of automated tools to bypass CAPTCHAs without proper authorization can lead to legal consequences. Make sure your scraping activities are compliant with all applicable laws and regulations to avoid potential issues.

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
