How to Use aiohttp for Web Scraping

Lucas Mitchell

Automation Engineer

23-Sep-2024

What is aiohttp?

aiohttp is an asynchronous HTTP client/server framework for Python. It builds on Python's asyncio library to run network operations concurrently, which makes it efficient for web scraping, web development, and other network-bound tasks.

Features:

- Asynchronous I/O: Built on top of asyncio for non-blocking network operations.

- Client and Server Support: Provides both HTTP client and server implementations.

- WebSockets Support: Native support for the WebSocket protocol.

- High Performance: Efficient handling of multiple connections simultaneously.

- Extensibility: Supports middlewares, signals, and plugins for advanced customization.
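To make the concurrency point concrete, here is a minimal sketch of fetching several pages at once over a single shared session. The helper names `fetch_text` and `fetch_all` and the `max_concurrency` parameter are assumptions made for illustration, not part of aiohttp; only `ClientSession`, `session.get`, `asyncio.Semaphore`, and `asyncio.gather` come from the libraries themselves.

```python
import asyncio

import aiohttp


async def fetch_text(session, url, semaphore):
    """Fetch one URL once a slot in the semaphore is free."""
    async with semaphore:
        async with session.get(url) as response:
            return response.status, await response.text()


async def fetch_all(urls, max_concurrency=5):
    """Fetch all URLs concurrently over one shared session.

    max_concurrency is a hypothetical knob that caps in-flight requests.
    """
    semaphore = asyncio.Semaphore(max_concurrency)
    async with aiohttp.ClientSession() as session:
        tasks = [fetch_text(session, url, semaphore) for url in urls]
        # gather() runs the coroutines concurrently and returns the
        # results in the same order as the input URLs.
        return await asyncio.gather(*tasks)
```

Calling `asyncio.run(fetch_all(urls))` with a list of URLs returns a list of `(status, body)` tuples. Reusing one session lets aiohttp pool connections, and the semaphore keeps the number of simultaneous requests to a target site bounded.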

Prerequisites

Before you start using aiohttp, ensure you have:

- Python 3.7 or higher

- pip for installing Python packages

Getting Started with aiohttp

Installation

Install aiohttp using pip:

bash

pip install aiohttp

Basic Example: Making a GET Request

Here's how to perform a simple GET request using aiohttp:

python

import asyncio

import aiohttp


async def fetch(url):
    # The session owns the connection pool and is closed on exit.
    async with aiohttp.ClientSession() as session:
        async with session.get(url) as response:
            status = response.status
            text = await response.text()
            print(f'Status Code: {status}')
            print('Response Body:', text)


if __name__ == '__main__':
    asyncio.run(fetch('https://httpbin.org/get'))

Web Scraping Example: Scraping Quotes from a Website

Let's scrape the Quotes to Scrape website to extract quotes and their authors:

python

import asyncio

import aiohttp
from bs4 import BeautifulSoup


async def fetch_content(url):
    # Download the page and return its HTML as text.
    async with aiohttp.ClientSession() as session:
        async with session.get(url) as response:
            return await response.text()


async def scrape_quotes():
    url = 'http://quotes.toscrape.com/'
    html = await fetch_content(url)
    soup = BeautifulSoup(html, 'html.parser')
    # Each quote on the page sits in a <div class="quote"> element.
    quotes = soup.find_all('div', class_='quote')
    for quote in quotes:
        text = quote.find('span', class_='text').get_text(strip=True)
        author = quote.find('small', class_='author').get_text(strip=True)
        print(f'{text} — {author}')


if __name__ == '__main__':
    asyncio.run(scrape_quotes())

Output:

“The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.” — Albert Einstein

“It is our choices, Harry, that show what we truly are, far more than our abilities.” — J.K. Rowling

... (additional quotes)

Handling Captchas with CapSolver and aiohttp

In this section, we'll explore how to integrate CapSolver with aiohttp to bypass captchas. CapSolver is an external service that solves various types of captchas, including ReCaptcha v2 and v3.

We'll demonstrate solving ReCaptcha V2 using CapSolver and then accessing a page that requires captcha solving.

Example: Solving ReCaptcha V2 with CapSolver and aiohttp

First, install the CapSolver package:

bash

pip install capsolver

Now, here's how you can solve a ReCaptcha V2 and use the solution in your request:

python

import asyncio
import os

import aiohttp
import capsolver

# Set your CapSolver API key
capsolver.api_key = os.getenv("CAPSOLVER_API_KEY", "Your CapSolver API Key")

PAGE_URL = os.getenv("PAGE_URL", "https://example.com")  # Page URL with captcha
SITE_KEY = os.getenv("SITE_KEY", "SITE_KEY")  # Captcha site key


async def solve_recaptcha_v2():
    # capsolver.solve() is a blocking call, so run it in a worker thread
    # to keep the event loop free while the captcha is being solved.
    loop = asyncio.get_running_loop()
    solution = await loop.run_in_executor(None, capsolver.solve, {
        "type": "ReCaptchaV2TaskProxyless",
        "websiteURL": PAGE_URL,
        "websiteKey": SITE_KEY
    })
    return solution['solution']['gRecaptchaResponse']


async def access_protected_page():
    captcha_response = await solve_recaptcha_v2()
    print("Captcha Solved!")

    async with aiohttp.ClientSession() as session:
        data = {
            'g-recaptcha-response': captcha_response,
            # Include other form data if required by the website
        }
        async with session.post(PAGE_URL, data=data) as response:
            content = await response.text()
            print('Page Content:', content)


if __name__ == '__main__':
    asyncio.run(access_protected_page())

Note: Replace PAGE_URL with the URL of the page containing the captcha and SITE_KEY with the site key of the captcha. The site key is usually found in the page's HTML source code within the captcha widget.
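As a rough illustration of where the site key lives, the sketch below looks for the `data-sitekey` attribute that the standard ReCaptcha V2 widget markup carries. The function name `find_site_key` is hypothetical, and this assumes the key is present in the static HTML; pages that render the widget from JavaScript may not expose it this way.

```python
from typing import Optional

from bs4 import BeautifulSoup


def find_site_key(html: str) -> Optional[str]:
    """Return the first data-sitekey value found in the HTML, or None."""
    soup = BeautifulSoup(html, "html.parser")
    # Match any tag that has a data-sitekey attribute, whatever its value.
    widget = soup.find(attrs={"data-sitekey": True})
    return widget["data-sitekey"] if widget else None
```

The HTML passed in can come from any aiohttp request, such as the `fetch_content` helper in the quotes example, and the returned value can then be supplied as SITE_KEY.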

Handling Proxies with aiohttp

To route your requests through a proxy, specify the proxy parameter:

python

import asyncio

import aiohttp


async def fetch(url, proxy):
    async with aiohttp.ClientSession() as session:
        # The proxy is set per request through the proxy argument.
        async with session.get(url, proxy=proxy) as response:
            return await response.text()


async def main():
    # Placeholder proxy URL: substitute your own credentials, host, and port.
    proxy = 'http://username:password@proxyserver:port'
    url = 'https://httpbin.org/ip'
    content = await fetch(url, proxy)
    print('Response Body:', content)


if __name__ == '__main__':
    asyncio.run(main())

Handling Cookies with aiohttp

You can manage cookies using CookieJar:

python

import asyncio

import aiohttp


async def main():
    jar = aiohttp.CookieJar()
    async with aiohttp.ClientSession(cookie_jar=jar) as session:
        # Use the response as a context manager so the connection is
        # released once the cookies have been stored in the jar.
        async with session.get('https://httpbin.org/cookies/set?name=value'):
            pass

        # Display the cookies
        for cookie in jar:
            print(f'{cookie.key}: {cookie.value}')


if __name__ == '__main__':
    asyncio.run(main())

Advanced Usage: Custom Headers and POST Requests

You can send custom headers and perform POST requests with aiohttp:

python

import asyncio

import aiohttp

async def main():

headers = {

'User-Agent': 'Mozilla/5.0 (compatible)',

'Accept-Language': 'en-US,en;q=0.5',

}

data = {

'username': 'testuser',

'password': 'testpass',

}

async with aiohttp.ClientSession() as session:

async with session.post('https://httpbin.org/post', headers=headers, data=data) as response:

json_response = await response.json()

print('Response JSON:', json_response)

if __name__ == '__main__':

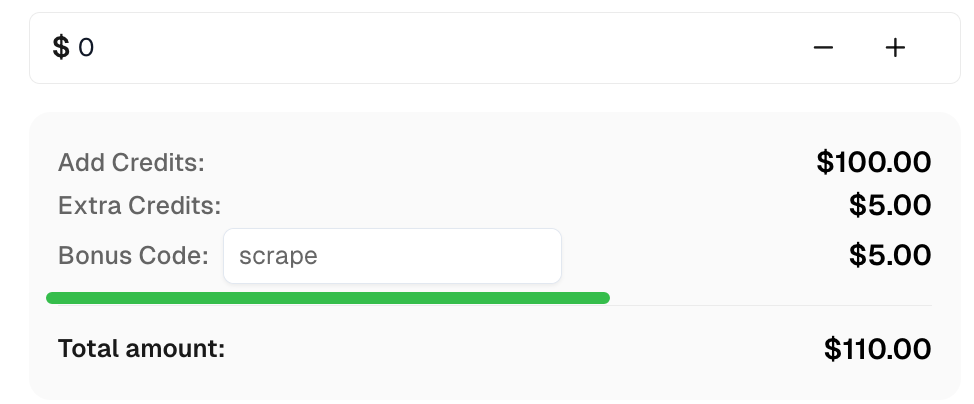

asyncio.run(main())Bonus Code

Claim your Bonus Code for top captcha solutions at CapSolver: scrape. After redeeming it, you will get an extra 5% bonus after each recharge, unlimited times.

Conclusion

With aiohttp, you can efficiently perform asynchronous web scraping tasks and handle multiple network operations concurrently. Integrating it with CapSolver allows you to solve captchas like ReCaptcha V2, enabling access to content that might otherwise be restricted.

Feel free to expand upon these examples to suit your specific needs. Always remember to respect the terms of service of the websites you scrape and adhere to legal guidelines.

Happy scraping!

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
