Web Scraping Challenges and How to Solve Them

Ethan Collins

Pattern Recognition Specialist

29-Mar-2024

The internet is a vast repository of data, but harnessing its true potential can be challenging. Much of that data is unstructured, and many websites restrict how it can be accessed, so using web data effectively means overcoming significant hurdles. This is where web scraping becomes invaluable. By automating the extraction and processing of unstructured web content, one can compile extensive datasets that provide valuable insights and a competitive edge.

However, web data enthusiasts and professionals encounter numerous challenges in this dynamic online landscape. In this article, we will explore six web scraping challenges that both beginners and experts must be aware of: IP blocking, CAPTCHAs, rate limiting, honeypot traps, dynamic content, and slow page loading. We will also look at the most effective solutions to each.

Let's take a closer look at the world of web scraping and discover how to conquer these challenges!

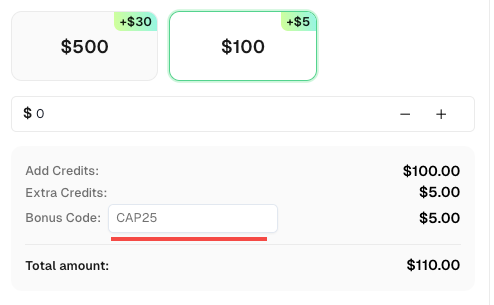

IP Blocking

To prevent abuse and unauthorized web scraping, websites often employ blocking measures that rely on unique identifiers like IP addresses. When certain limits are exceeded or suspicious activities are detected, the website may ban the associated IP address, effectively preventing automated scraping.

Websites may also implement geo-blocking, which blocks IPs based on their geographical location, as well as other anti-bot measures that analyze IP origin and unusual usage patterns to identify and block IPs.

Solution

Fortunately, there are several solutions to overcome IP blocking. The simplest approach involves adjusting your requests to adhere to the website's limits, controlling the rate of requests and maintaining a natural usage pattern. However, this approach significantly restricts the amount of data that can be scraped within a given timeframe.

A more scalable solution is to utilize a proxy service that incorporates IP rotation and retry mechanisms to evade IP blocking. It's important to note that web scraping using proxies and other circumvention methods may raise ethical concerns. Always ensure compliance with local and international data regulations and carefully review the website's terms of service (TOS) and policies before proceeding.
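As a rough illustration of IP rotation with retries, the sketch below cycles through a list of proxies and moves on to the next one whenever a request fails. It uses only the Python standard library. The proxy addresses are placeholders, and the function names `fetch_via_proxy` and `fetch_with_rotation` are made up for this example; a commercial proxy service would normally handle the rotation for you.

```python
import itertools
import urllib.error
import urllib.request
from typing import Callable, Optional, Sequence


def fetch_via_proxy(url: str, proxy: str, timeout: float = 10.0) -> bytes:
    """Fetch `url` through one HTTP(S) proxy, e.g. "http://203.0.113.10:8080"."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.read()


def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    max_attempts: int = 5,
    fetch: Callable[[str, str], bytes] = fetch_via_proxy,
) -> bytes:
    """Try proxies in round-robin order until one succeeds or attempts run out."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = itertools.cycle(proxies)
    last_error: Optional[Exception] = None
    for _ in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)
        except (urllib.error.URLError, TimeoutError, ConnectionError) as exc:
            # This proxy failed or was blocked; remember why and try the next one.
            last_error = exc
    raise RuntimeError(f"all {max_attempts} attempts failed") from last_error
```

The `fetch` parameter exists so the rotation logic can be exercised without a network connection; in real use the default is enough, for example `fetch_with_rotation("https://example.com", ["http://203.0.113.10:8080", "http://203.0.113.11:8080"])`.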

CAPTCHAs

A CAPTCHA, short for Completely Automated Public Turing test to tell Computers and Humans Apart, is a widely used security measure that impedes web scrapers from accessing and extracting data from websites.

This system presents challenges that require manual interaction to prove the user's authenticity before granting access to the desired content. These challenges can take various forms, including image recognition, textual puzzles, auditory puzzles, or even analysis of user behavior.

Solution

To overcome CAPTCHAs, one can either solve them or take measures to avoid triggering them. It is generally recommended to opt for the former approach, as it ensures data integrity, increases automation efficiency, provides reliability and stability, and complies with legal and ethical guidelines. Relying only on avoidance may result in incomplete data, more manual work, use of non-compliant methods, and exposure to legal and ethical risks. Solving CAPTCHAs is therefore the more reliable and sustainable approach.

CapSolver, for example, is a third-party service dedicated to solving CAPTCHAs. It offers an API that can be integrated directly into scraping scripts or applications.

By outsourcing CAPTCHA solving to services like CapSolver, you can streamline the scraping process and reduce manual intervention.

Rate Limiting

Rate limiting is a method employed by websites to protect against abuse and different types of attacks. It sets limits on the number of requests a client can make within a given time frame. If the limit is exceeded, the website may throttle or block the requests using techniques such as IP blocking or CAPTCHA.

Rate limiting primarily focuses on identifying individual clients and monitoring their usage to ensure they stay within the set limits. Identification can be based on the client's IP address or utilize techniques like browser fingerprinting, which involves detecting unique client features. User-agent strings and cookies may also be examined as part of the identification process.

Solution

There are several ways to deal with rate limits. One simple approach is to control the frequency and timing of your requests so that they look more like human browsing. This can include adding random delays between requests and waiting before retrying a failed request. Other solutions involve rotating your IP address and customizing various properties, such as the user-agent string and browser fingerprint.
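A minimal sketch of request pacing might look like the following. It pauses for a random interval between requests and provides an exponential backoff delay with jitter for retries. The function names, default delays, and the `fetch` callable are assumptions made for this example, not part of any particular library.

```python
import random
import time
from typing import Callable, Iterable, Iterator, Optional, Tuple


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0,
                  rng: Optional[random.Random] = None) -> float:
    """Seconds to wait before retry number `attempt` (0-based).

    The upper bound doubles with every attempt, up to `cap`, and the actual
    delay is drawn at random below that bound so that clients do not retry
    in lockstep.
    """
    source = rng or random
    ceiling = min(cap, base * (2 ** attempt))
    return source.uniform(0, ceiling)


def crawl_politely(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    min_delay: float = 1.0,
    max_delay: float = 3.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> Iterator[Tuple[str, str]]:
    """Yield (url, body) pairs, pausing a random interval between requests."""
    source = rng or random
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, a random one before each later request.
            sleep(source.uniform(min_delay, max_delay))
        yield url, fetch(url)
```

Sensible delay values depend entirely on the target site. If the site publishes a crawl delay or returns a Retry-After header, those should take priority over the defaults chosen here.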

Honeypot Traps

Honeypot traps pose a significant challenge for web scraping bots, as they are specifically designed to deceive automated scripts. These traps involve the inclusion of hidden elements or links that are intended to be accessed only by bots.

The purpose of honeypot traps is to identify and block scraping activities, as real users would not interact with these hidden elements. When a scraper encounters and interacts with these traps, it raises a red flag, potentially leading to the scraper being banned from the website.

Solution

To overcome this challenge, it is crucial to be vigilant and avoid falling into honeypot traps. One effective strategy is to identify and avoid hidden links. These links are typically configured with CSS properties such as display: none or visibility: hidden, making them invisible to human users but detectable by scraping bots.

By carefully analyzing the HTML structure and CSS properties of the web pages you are scraping, you can exclude or bypass these hidden links. This way, you can minimize the risk of triggering honeypot traps and maintain the integrity and stability of your scraping process.
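The idea can be sketched with Python's built-in HTML parser. The example below collects link targets while skipping any link that is hidden through an inline style or the `hidden` attribute, either on the link itself or on an enclosing element. It is a simplified sketch: it does not evaluate external stylesheets or CSS classes, and it assumes reasonably well-formed HTML. The names `VisibleLinkCollector` and `visible_links` are invented for this illustration.

```python
import re
from html.parser import HTMLParser
from typing import List, Optional, Tuple

# Inline styles that hide an element from human visitors.
HIDDEN_STYLE = re.compile(r"display\s*:\s*none|visibility\s*:\s*hidden", re.IGNORECASE)

# Elements that never have a closing tag, so they must not go on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class VisibleLinkCollector(HTMLParser):
    """Collect href values of links that are not hidden by inline markup."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []
        # One flag per open element: is it, or any ancestor, hidden?
        self._hidden_stack: List[bool] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        attributes = dict(attrs)
        style = attributes.get("style") or ""
        hidden_here = bool(HIDDEN_STYLE.search(style)) or "hidden" in attributes
        inherited = bool(self._hidden_stack) and self._hidden_stack[-1]
        hidden = hidden_here or inherited
        href = attributes.get("href")
        if tag == "a" and href and not hidden:
            self.links.append(href)
        if tag not in VOID_TAGS:
            self._hidden_stack.append(hidden)

    def handle_endtag(self, tag: str) -> None:
        if tag not in VOID_TAGS and self._hidden_stack:
            self._hidden_stack.pop()


def visible_links(html: str) -> List[str]:
    """Return the hrefs of links a human visitor could plausibly see."""
    collector = VisibleLinkCollector()
    collector.feed(html)
    return collector.links
```

For sites that hide traps through stylesheets rather than inline styles, a browser automation tool that can report an element's computed visibility is a more dependable check.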

It is important to note that respecting website policies and terms of service is essential when engaging in web scraping activities. Always ensure that your scraping activities align with the ethical and legal guidelines set by the website owners.

Dynamic Content

In addition to rate limiting and blocking, web scraping presents challenges related to detecting and handling dynamic content.

Modern websites often incorporate a significant amount of JavaScript to enhance interactivity and dynamically render various parts of the user interface, additional content, or even entire pages.

With the prevalence of single-page applications (SPAs), JavaScript plays a crucial role in rendering almost every aspect of the website. Additionally, other types of web applications utilize JavaScript to asynchronously load content, allowing features like infinite scroll without the need for page refresh or reload. In such cases, parsing the HTML alone is insufficient.

To successfully scrape dynamic content, it is necessary to load and process the underlying JavaScript code. However, implementing this correctly in a custom script can be challenging. This is why many developers prefer utilizing headless browsers and web automation tooling such as Playwright, Puppeteer, and Selenium.

By leveraging these tools, you can emulate a browser environment, execute JavaScript, and obtain the fully rendered HTML, including any dynamically loaded content. This approach ensures that you capture all the desired information, even from websites heavily reliant on JavaScript for content generation.
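As one possible illustration, the sketch below uses Playwright's synchronous Python API to open a page in headless Chromium, wait for an element that only appears once the JavaScript has run, and return the rendered HTML. It assumes Playwright and its browser binaries are installed (`pip install playwright`, then `playwright install chromium`). The helper names, the example selector, and the timeout are assumptions chosen for this example.

```python
def rendered_html(page, url: str, ready_selector: str, timeout_ms: int = 15000) -> str:
    """Load `url` in an already-open page and return the HTML after rendering.

    `ready_selector` is a CSS selector for an element that only exists once
    the dynamic content has loaded, for example "#results .item".
    """
    page.goto(url, wait_until="domcontentloaded", timeout=timeout_ms)
    page.wait_for_selector(ready_selector, timeout=timeout_ms)
    return page.content()


def scrape_dynamic_page(url: str, ready_selector: str) -> str:
    """Start headless Chromium, render the page, and always close the browser."""
    # Imported here so the helper above stays usable without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as playwright:
        browser = playwright.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            return rendered_html(page, url, ready_selector)
        finally:
            browser.close()
```

Waiting for a specific element is usually more dependable than waiting a fixed number of seconds, because it adapts to however long the page actually takes to render.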

Slow Page Loading

When a website experiences a high volume of concurrent requests, its loading speed can be significantly affected. Factors such as page size, network latency, server performance, and the amount of JavaScript and other resources to load all contribute to this issue.

Slow page loading can cause delays in data retrieval for web scraping. This can slow down the entire scraping project, especially when dealing with multiple pages. It can also lead to timeouts, unpredictable scraping times, incomplete data extraction, or incorrect data if certain page elements fail to load properly.

Solution

To address this challenge, it is recommended to use browser automation tools such as Selenium or Puppeteer, which can drive a headless browser. These tools let you wait until a page, or a specific element on it, has finished loading before extracting data, which avoids incomplete or inaccurate results. Setting timeouts, retrying or refreshing failed loads, and optimizing your code can also help mitigate the impact of slow page loading.
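For pages that do not need a full browser, the timeout-and-retry idea can be sketched with the standard library alone. The example below sets a per-request timeout and retries until the response contains a marker that is only present in a completely loaded page. The function names, the marker approach, and the default values are assumptions made for illustration.

```python
import time
import urllib.request
from typing import Callable


def fetch_html(url: str, timeout: float) -> str:
    """Download a page as text, giving up after `timeout` seconds."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def fetch_until_complete(
    url: str,
    required_marker: str,
    attempts: int = 3,
    timeout: float = 20.0,
    pause: float = 2.0,
    fetch: Callable[[str, float], str] = fetch_html,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Retry until the page contains `required_marker`, e.g. a closing footer tag."""
    last_problem = "no attempts were made"
    for attempt in range(1, attempts + 1):
        try:
            html = fetch(url, timeout)
        except OSError as exc:
            # URL errors, timeouts, and connection resets are all OSError subclasses.
            last_problem = f"request failed: {exc}"
        else:
            if required_marker in html:
                return html
            last_problem = "response did not contain the required marker"
        if attempt < attempts:
            sleep(pause)
    raise RuntimeError(f"gave up on {url} after {attempts} attempts: {last_problem}")
```

Checking for a marker guards against the case where a slow server returns a truncated or partial page with a successful status code, which a timeout alone would not catch.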

Conclusion

We face several challenges when it comes to web scraping. These challenges include IP blocking, CAPTCHA verification, rate limiting, honeypot traps, dynamic content, and slow page loading. However, we can overcome these challenges by using proxies, solving CAPTCHAs, controlling request frequency, avoiding traps, leveraging headless browsers, and optimizing our code. By addressing these obstacles, we can improve our web scraping efforts, gather valuable information, and ensure compliance.

FAQ: Common Questions About Web Scraping Challenges

1. What is web scraping and why is it important?

Web scraping is the automated process of collecting and extracting data from websites. It’s widely used for market research, SEO tracking, data analysis, and machine learning. Efficient scraping helps businesses gain insights and maintain a competitive edge.

2. Why do websites block web scrapers?

Websites block scrapers to prevent misuse, protect server performance, and secure private data. Common anti-bot methods include IP blocking, CAPTCHA verification, and JavaScript fingerprinting.

3. How can I solve CAPTCHA during web scraping?

You can use third-party CAPTCHA solving services like CapSolver. It provides APIs to automatically solve reCAPTCHA, hCaptcha, and other CAPTCHA types, ensuring uninterrupted data collection.

4. What’s the best way to avoid IP blocking when scraping websites?

Use rotating proxies and control your request rate. Sending too many requests in a short time can trigger rate limits or bans. Residential proxies and ethical scraping practices are strongly recommended.

5. How do I handle dynamic or JavaScript-heavy content?

Modern websites often use JavaScript frameworks like React or Vue, which dynamically load content. Tools like Puppeteer, Playwright, or Selenium simulate a browser environment to render and scrape full page data effectively.

6. Are there legal or ethical concerns with web scraping?

Yes. Always comply with website Terms of Service (ToS) and data privacy laws (like GDPR or CCPA). Focus on publicly available data and avoid scraping sensitive or restricted information.

7. How can I speed up slow web scraping projects?

Optimize your scripts by setting proper timeouts, caching results, and using asynchronous requests. Also, solving CAPTCHAs efficiently with CapSolver and using fast proxies can reduce delays and improve stability.

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
