The 3 Best Programming Languages for Web Scraping

Lucas Mitchell

Automation Engineer

19-Oct-2025

Web scraping has become an essential technique for extracting data from websites in various domains such as research, data analysis, and business intelligence. When it comes to choosing the right programming language for web scraping, there are several options available. In this article, we will explore the three best programming languages for web scraping, considering factors such as ease of use, availability of libraries and frameworks, and community support.

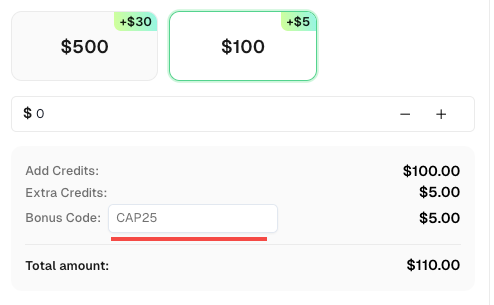

Bonus Code

Bonus code for top CAPTCHA solutions: redeem CAP25 in the CapSolver Dashboard to get an extra 5% bonus on every recharge, with no limit.

JavaScript

JavaScript is a highly versatile and widely adopted programming language, making it an excellent choice for web scraping tasks. It offers a vast range of libraries and tools within its ecosystem and benefits from a supportive and enthusiastic community.

JavaScript's flexibility is a notable advantage for web scraping. It seamlessly integrates with HTML, enabling easy client-side usage. Additionally, with the advent of Node.js, JavaScript can be deployed on the server side as well, providing developers with multiple options for implementation.

In terms of performance, JavaScript has made significant strides to optimize resource usage. Engines like V8 have contributed to improved performance, making JavaScript efficient for web scraping workloads. Its ability to handle asynchronous operations also enables concurrent processing of requests, further enhancing performance for large-scale scraping applications.

JavaScript has a relatively gentle learning curve compared to other languages, making it accessible to both beginner and experienced developers. The language's straightforward syntax and extensive documentation, along with abundant learning resources, contribute to its user-friendly nature.

The JavaScript community is robust and continually growing, offering invaluable support and collaboration opportunities. The vast network of experienced professionals ensures that developers, especially newcomers, can find assistance, troubleshoot issues, and access best practices. This vibrant community fosters innovation and contributes to the evolution of web scraping techniques and solutions.

JavaScript provides a wide range of web scraping libraries that streamline the scraping process. Axios handles HTTP requests, Cheerio parses and queries HTML, and Puppeteer and Playwright automate real browsers for pages that render their content with JavaScript. Together, these tools cover most data extraction requirements.

Python

Python is undoubtedly one of the most popular programming languages for web scraping, and for good reason. It provides a rich ecosystem of libraries and tools specifically designed for web scraping tasks. One of the key libraries in Python is BeautifulSoup, which simplifies the process of parsing HTML and XML documents. With its intuitive and easy-to-use methods, developers can navigate the website's structure, extract data, and handle complex scraping scenarios.
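As a rough illustration of the parse-and-extract step described above, the sketch below collects links from a small HTML snippet. To stay dependency-free it uses Python's built-in html.parser module rather than BeautifulSoup, which offers higher-level helpers such as find_all for the same job. The LinkCollector class and the sample HTML are made up for this example.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, text) pairs for every <a href=...> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> tag currently being read, if any
        self._text = []    # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside a link with an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Made-up markup standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li><a href="/products/1">First product</a></li>
  <li><a href="/products/2">Second <b>product</b></a></li>
</ul>
"""

collector = LinkCollector()
collector.feed(SAMPLE_HTML)
collector.close()

for href, text in collector.links:
    print(href, text)
```

In a real scraper, the HTML string would come from an HTTP response instead of a constant.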

In addition to BeautifulSoup, Python offers other powerful libraries such as Scrapy and Selenium. Scrapy is a comprehensive web scraping framework that handles the entire scraping process, from requesting web pages to storing extracted data. Selenium is a browser automation tool that enables interaction with web elements, making it ideal for scraping dynamic websites.

Python's versatility extends beyond scraping libraries. It has excellent support for handling HTTP requests with the requests library, enabling developers to retrieve website data efficiently. Moreover, Python's integration capabilities with CAPTCHA-solving tools like CapSolver simplify the process of bypassing CAPTCHAs, making it a go-to choice for scraping websites with CAPTCHA protection.

Here's an example of using CapSolver in Python to solve reCAPTCHA v2:

How to Solve Any CAPTCHA with CapSolver Using Python:

Prerequisites

- A working proxy

- Python installed

- CapSolver API key
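The API key and proxy listed above are credentials, so one option is to read them from environment variables rather than hardcoding them in a script. The following is a minimal sketch; the variable names CAPSOLVER_API_KEY and CAPSOLVER_PROXY and the load_settings helper are hypothetical choices for this example, not names defined by CapSolver.

```python
import os


def load_settings(env=None):
    """Read CapSolver credentials from environment variables.

    The variable names used here are illustrative assumptions.
    """
    env = os.environ if env is None else env

    api_key = env.get("CAPSOLVER_API_KEY")
    if not api_key:
        raise RuntimeError("CAPSOLVER_API_KEY is not set")

    # The proxy is optional: proxyless task types do not need one.
    proxy = env.get("CAPSOLVER_PROXY") or None

    return {"api_key": api_key, "proxy": proxy}
```

The returned values could then be assigned to capsolver.api_key and to the task's "proxy" field in place of the literal strings.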

🤖 Step 1: Install Necessary Packages

Execute the following command to install the required package:

pip install capsolver

Here is an example of reCAPTCHA v2:

👨💻 Python Code to Solve reCAPTCHA v2 with Your Proxy

Here's a Python sample script to accomplish the task:

    import capsolver

    # Consider using environment variables for sensitive information
    PROXY = "http://username:password@host:port"
    capsolver.api_key = "Your Capsolver API Key"
    PAGE_URL = "PAGE_URL"
    PAGE_KEY = "PAGE_SITE_KEY"


    def solve_recaptcha_v2(url, key):
        # Submit a reCAPTCHA v2 task that is solved through PROXY
        solution = capsolver.solve({
            "type": "ReCaptchaV2Task",
            "websiteURL": url,
            "websiteKey": key,
            "proxy": PROXY,
        })
        return solution


    def main():
        print("Solving reCaptcha v2")
        solution = solve_recaptcha_v2(PAGE_URL, PAGE_KEY)
        print("Solution: ", solution)


    if __name__ == "__main__":
        main()

👨💻 Python Code to Solve reCAPTCHA v2 without Proxy

Here's a Python sample script to accomplish the task:

    import capsolver

    # Consider using environment variables for sensitive information
    capsolver.api_key = "Your Capsolver API Key"
    PAGE_URL = "PAGE_URL"
    PAGE_KEY = "PAGE_SITE_KEY"


    def solve_recaptcha_v2(url, key):
        # Submit a proxyless reCAPTCHA v2 task (no proxy field required)
        solution = capsolver.solve({
            "type": "ReCaptchaV2TaskProxyLess",
            "websiteURL": url,
            "websiteKey": key,
        })
        return solution


    def main():
        print("Solving reCaptcha v2")
        solution = solve_recaptcha_v2(PAGE_URL, PAGE_KEY)
        print("Solution: ", solution)


    if __name__ == "__main__":
        main()

Ruby

Ruby, known for its simplicity and readability, is also a viable language for web scraping. It offers an elegant and expressive syntax that allows developers to write concise scraping scripts. Ruby's Nokogiri library is widely used for parsing HTML and XML documents, providing similar functionality to Python's BeautifulSoup. Nokogiri's intuitive API enables developers to traverse the document structure, extract data, and manipulate web elements with ease.

Additionally, Ruby has the Mechanize gem, which simplifies the process of interacting with websites. Mechanize handles tasks such as submitting forms, managing cookies, and handling redirects, making it an excellent choice for scraping websites that involve complex interactions.

Ruby's clean and expressive code, coupled with the power of Nokogiri and Mechanize, makes it a solid option for web scraping projects.

Conclusion

In conclusion, Python, JavaScript, and Ruby are three of the best programming languages for web scraping. Python's extensive libraries, such as BeautifulSoup, Scrapy, and Selenium, make it a popular choice for a wide range of scraping tasks. JavaScript, with frameworks like Puppeteer, excels at scraping dynamic websites that heavily rely on client-side rendering. Ruby's simplicity and the capabilities of libraries like Nokogiri and Mechanize make it a reliable choice for web scraping.

When choosing a programming language for web scraping, consider the specific requirements of your project, the complexity of the target websites, and your familiarity with the language. Remember to always respect the terms of service and legal restrictions of the websites you scrape.
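One common starting point for checking a site's stated crawling preferences is its robots.txt file, although it does not replace reading the terms of service. The sketch below uses Python's standard urllib.robotparser module on an inline sample file; the rules, the user agent name, and the URLs are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; a real scraper would download the site's own file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

USER_AGENT = "example-scraper"  # hypothetical user agent name


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS_TXT, USER_AGENT, "https://example.com/products"))
print(is_allowed(SAMPLE_ROBOTS_TXT, USER_AGENT, "https://example.com/private/data"))
```

A scraper could call a check like this before each request and skip any URL the site has asked crawlers to avoid.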

Compliance Disclaimer: The information provided on this blog is for informational purposes only. CapSolver is committed to compliance with all applicable laws and regulations. The use of the CapSolver network for illegal, fraudulent, or abusive activities is strictly prohibited and will be investigated. Our captcha-solving solutions enhance user experience while ensuring 100% compliance in helping solve captcha difficulties during public data crawling. We encourage responsible use of our services. For more information, please visit our Terms of Service and Privacy Policy.
